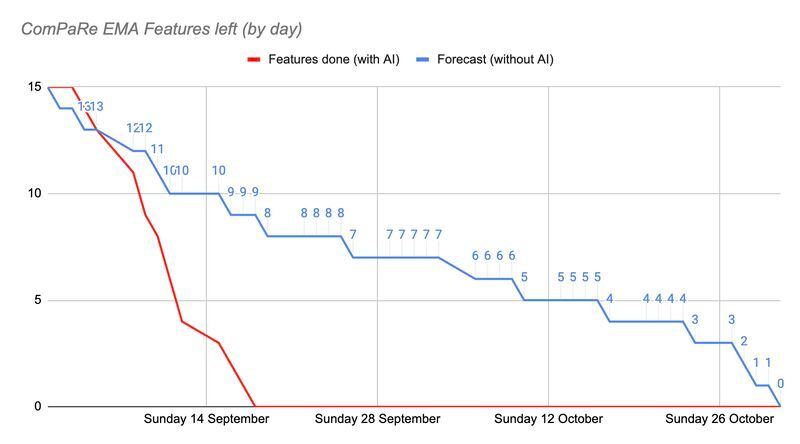

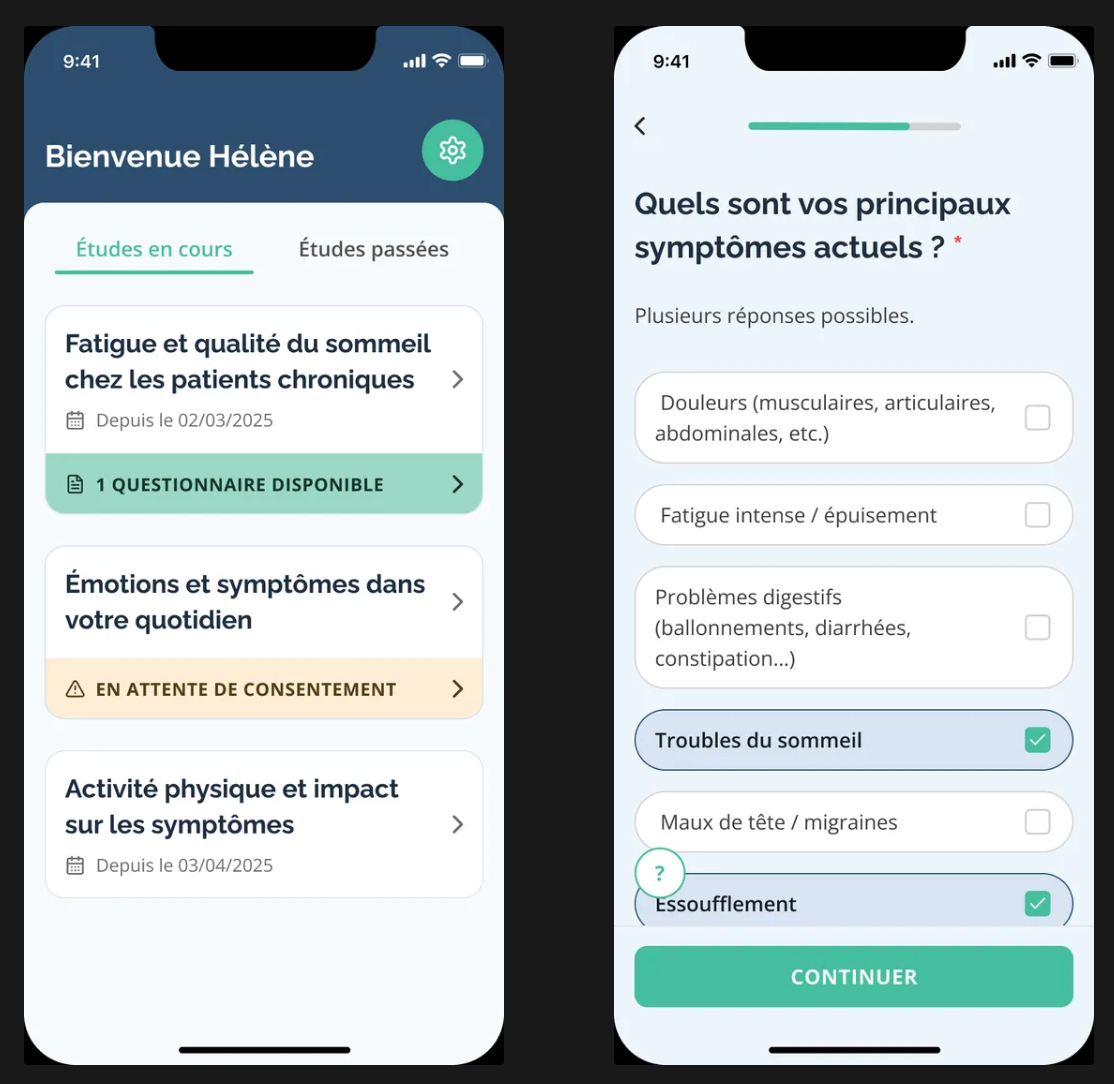

In three weeks, I pushed 15 brand-new features into production for ComPaRe EMA, the mobile app we’re building for AP‑HP.

Without LLM agents, that same backlog would have taken two months to develop.

Without AI, the team estimated that shipping the 15 remaining features to production would take two months of development.

This isn’t about typing speed. It’s not “Copilot writes code so I don’t have to.”

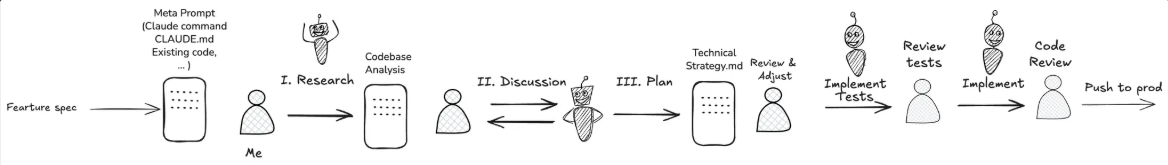

The real shift is a new way of designing, coding, and managing software projects, which I call Right-First-Time Agentic Coding (a bit longer than “Vibe planning”). In this model, conception dominates completion: you engineer the whole flow with an AI agent before you write a single line of code.

As a CTO, I deliberately stepped back into the role of developer on this project, because you can’t manage, train, or make strategic calls about AI-assisted development if you haven’t lived through the details yourself. Buying Copilot licenses for everyone and “waiting to see” is a recipe for mediocrity. There is no better way to understand the future of coding than to experiment yourself.

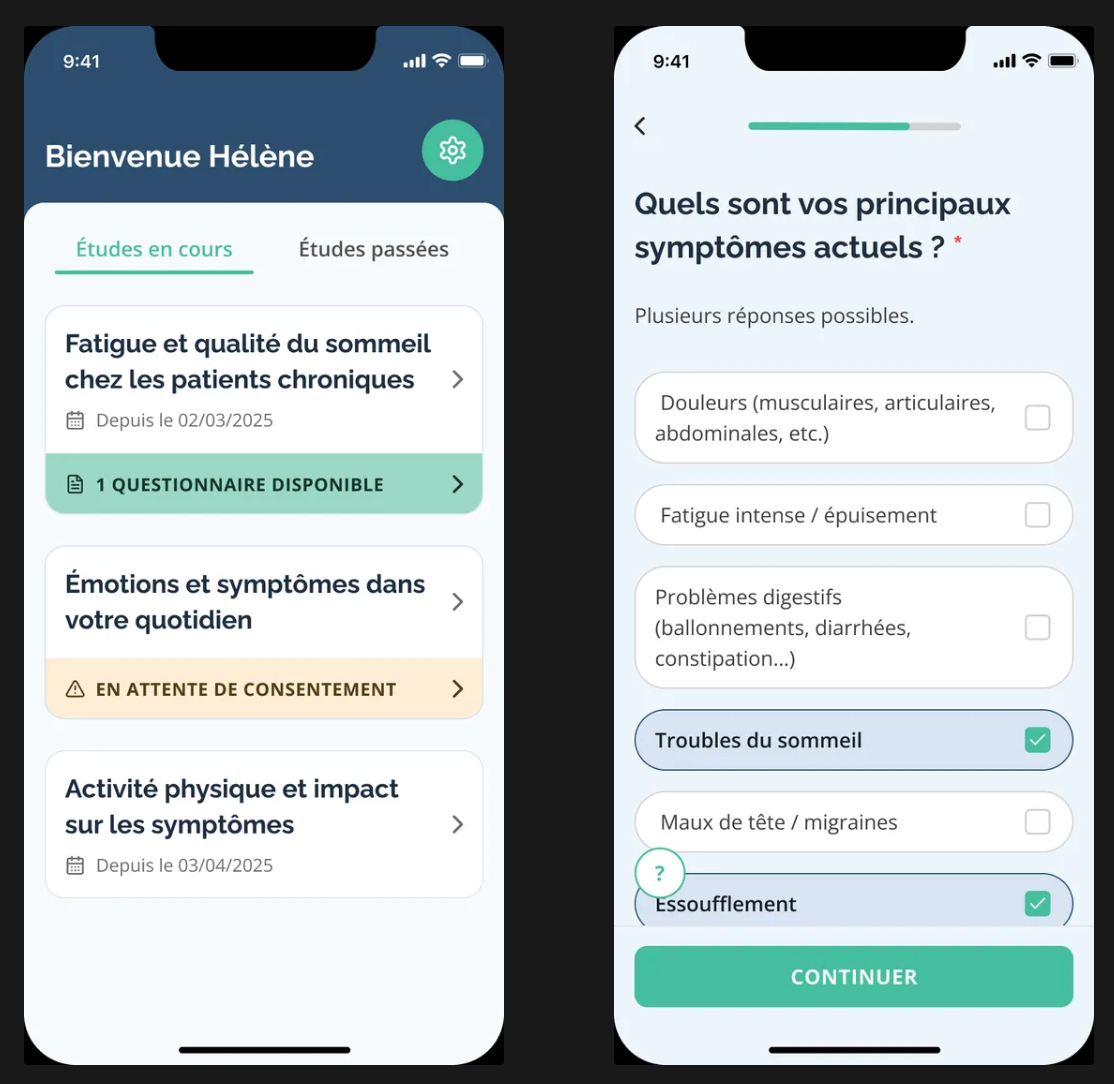

ComPaRe EMA captures contextual insights into daily life with a chronic disease to support research.

Let’s talk about productivity

“AI productivity” stats are often illusory: they measure speed, not value.

Take, for instance, the headline that “AI boosts commits by 17%”.

If your customer still receives the same app on the same date with identical quality, that 17% is a 0% productivity gain.

In fact, the illusion of faster typing can backfire: some studies report that developers who rely on AI for “speed” sometimes take longer to resolve real issues in open-source repositories.

According to the Lean Tech Manifesto, what matters most is the value for the customer. So in lean terms, productivity = value delivered ÷ cost.

For my customer (AP-HP), value means:

Lead time: do they get their app sooner, so research can start earlier?

Better research insights: does the app collect more diverse or better data (less bias, better UX, stronger performance), so researchers can advance their work faster?

For Theodo, cost translates to the man-hours and resource allocation needed to achieve the same quality.

So what should I measure?

Conception-to-production time: how fast we move features from idea to users’ hands.

Rework rate: how often we have to redo work (defects, bugs, rejected features).

External bottleneck time: time spent waiting for non‑development gatekeepers (App Store review, QA validation, third-party API support, …).
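As a rough illustration, the three measures above could be computed from a simple per-feature log. This is only a sketch: the `FeatureRecord` shape, its field names, and the sample numbers are hypothetical and are not data from the project.

```typescript
// Hypothetical record of one feature's journey; field names are illustrative.
interface FeatureRecord {
  name: string;
  conceptionDay: number;    // day the design work started
  productionDay: number;    // day the feature reached users
  reworkCount: number;      // defects, bugs, or rejections that forced rework
  externalWaitDays: number; // days spent waiting on non-development gatekeepers
}

interface DeliveryMetrics {
  avgLeadTimeDays: number;
  reworkRate: number;       // share of features that needed any rework
  avgExternalWaitDays: number;
}

function computeMetrics(features: FeatureRecord[]): DeliveryMetrics {
  if (features.length === 0) {
    throw new Error("at least one feature is required");
  }
  const n = features.length;
  const sum = (values: number[]): number => values.reduce((a, b) => a + b, 0);

  return {
    avgLeadTimeDays: sum(features.map((f) => f.productionDay - f.conceptionDay)) / n,
    reworkRate: features.filter((f) => f.reworkCount > 0).length / n,
    avgExternalWaitDays: sum(features.map((f) => f.externalWaitDays)) / n,
  };
}

// Made-up sample data, for illustration only.
const sampleFeatures: FeatureRecord[] = [
  { name: "feature-a", conceptionDay: 0, productionDay: 2, reworkCount: 0, externalWaitDays: 1 },
  { name: "feature-b", conceptionDay: 1, productionDay: 5, reworkCount: 1, externalWaitDays: 0 },
];

const metrics = computeMetrics(sampleFeatures);
console.log(metrics);
```

Tracking these per feature, rather than counting commits, keeps the focus on what the customer actually receives.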

With that framing, let’s look at how LLM agents really changed my productivity.

1. Conception > Completion: the real productivity gains come from better design, not faster typing

I started experimenting with this method at the end of August, right before GitHub announced its “Spec Driven Development” approach (Sept 2nd, 2025). The two approaches line up pretty well, so I’ll focus on what worked for me.

The biggest wins didn’t come from writing code faster, but from designing a feature right first time so it’s ready to ship.

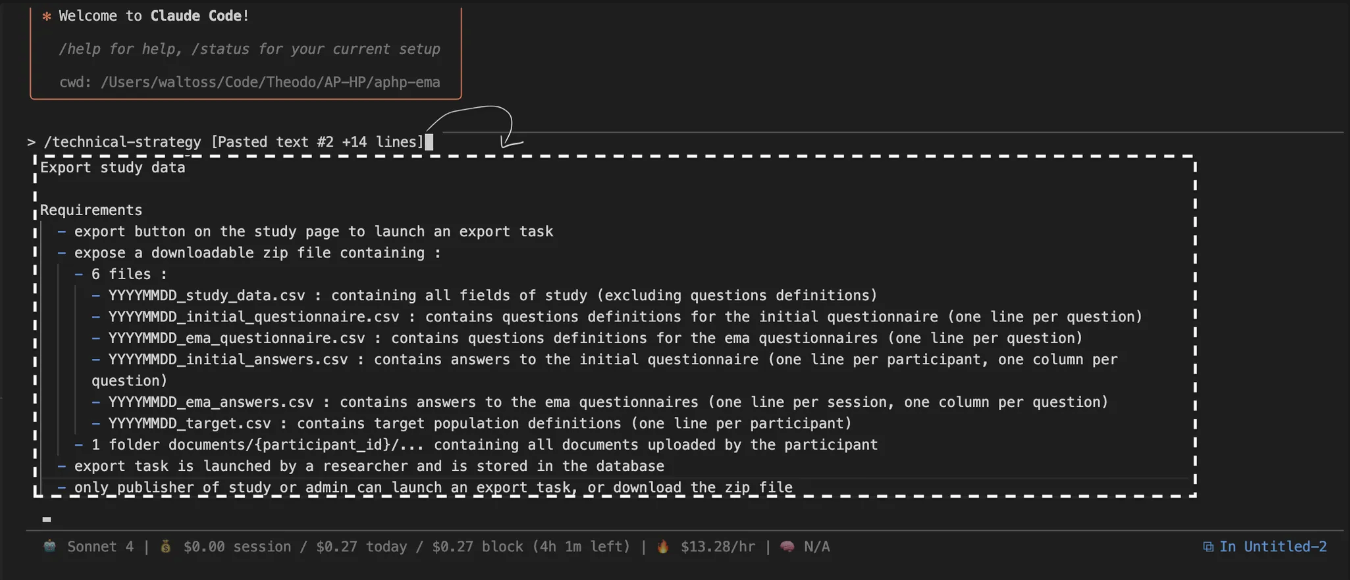

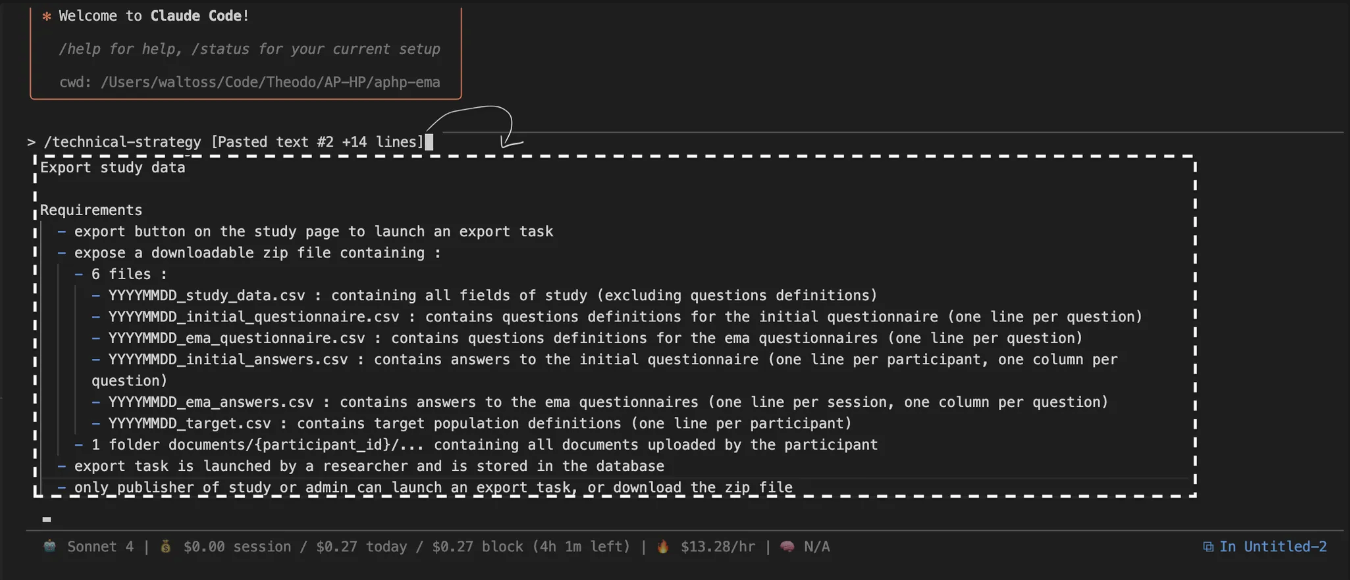

The /technical-strategy Claude command

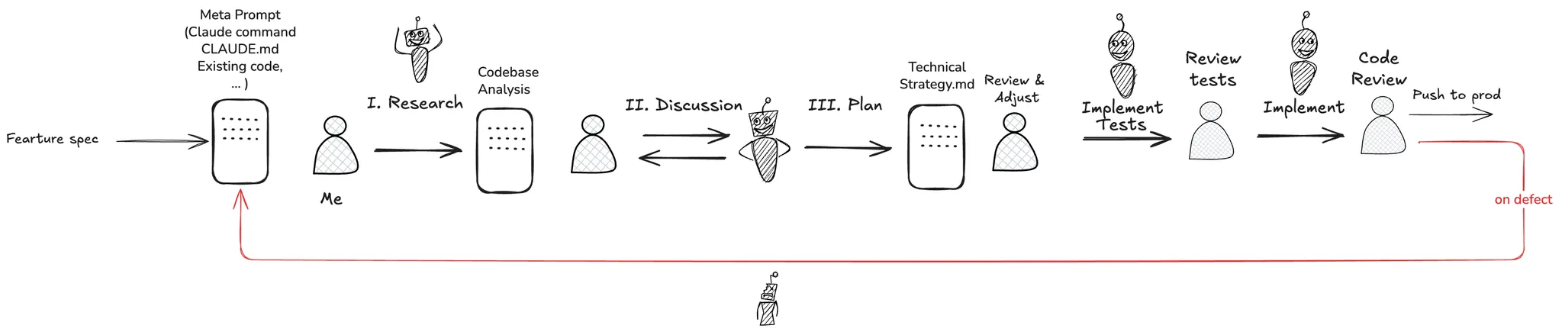

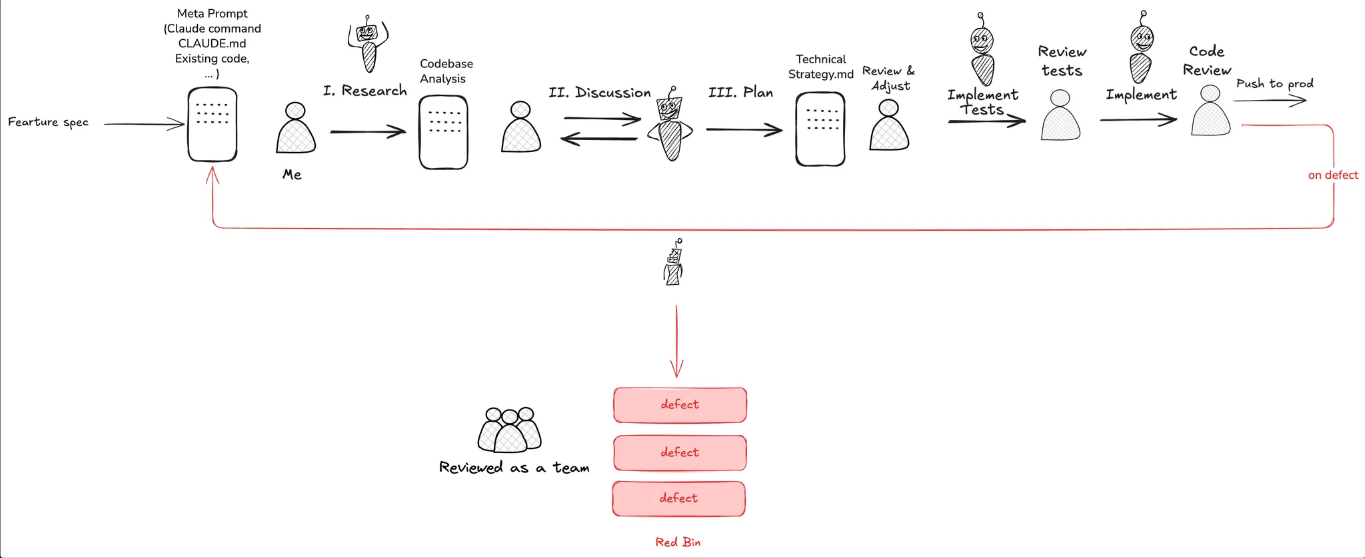

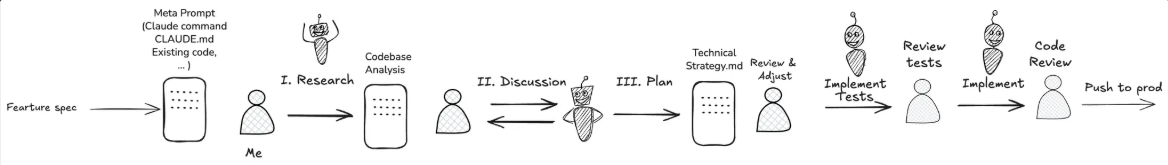

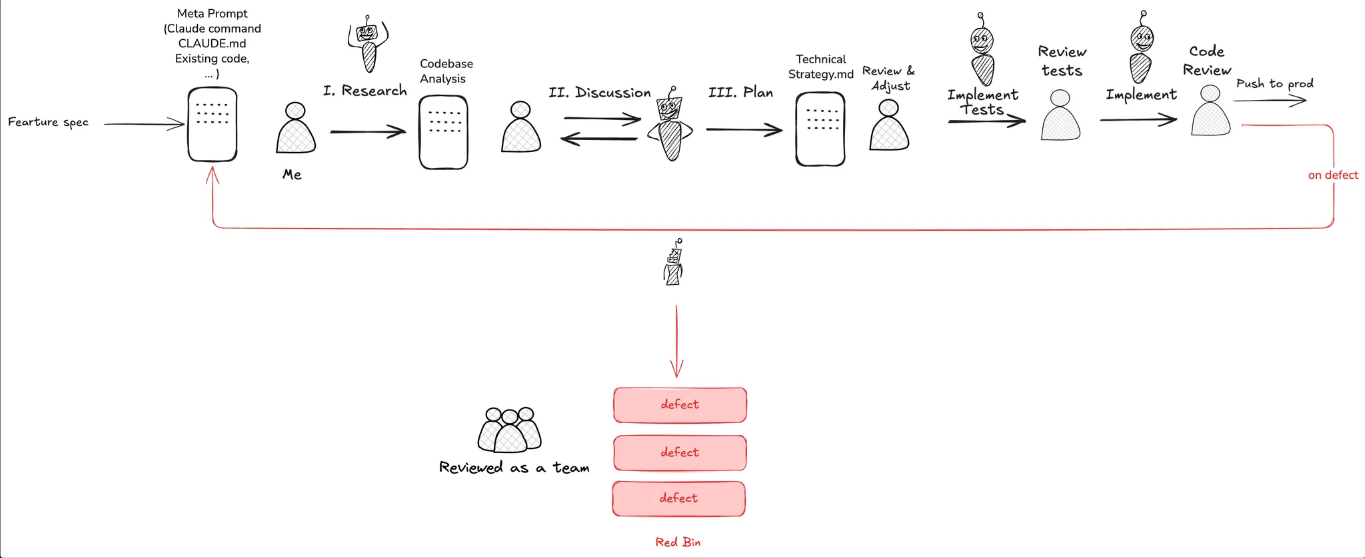

At the heart of my workflow is a single slash command (adapted from the initiative of François Hendriks 🙏) that orchestrates the entire feature‑design cycle. It breaks the process into 3 phases:

Phase 1 : Codebase exploration

Phase 2 : Targeted questions & discussion

Phase 3 : Technical strategy writing

Below I walk through each phase using the Export‑Study feature from ComPaRe as a running example.

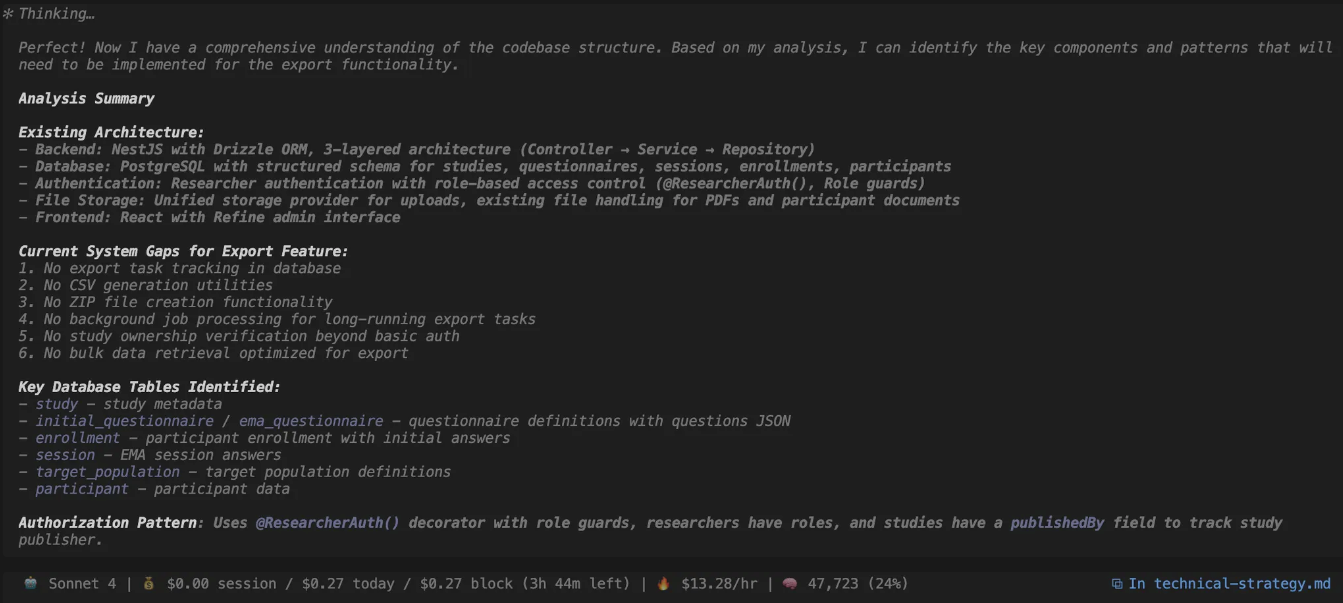

Phase 1 : Codebase exploration

The performance of an agent all comes down to context engineering. In this first phase, Claude must build its own context for the following steps. My job is to keep a mental picture of this context at all times by asking myself these questions:

Do I have enough context space left for the remaining work? (out of a ≈ 200k-token window)

Is the context poisoned, i.e. does it contain tokens that reduce the likelihood of success?

Conversely, does it lack important information from the codebase? Are any critical tokens missing?

https://ccusage.com/guide/statusline is one option for keeping a constant eye on your context window.

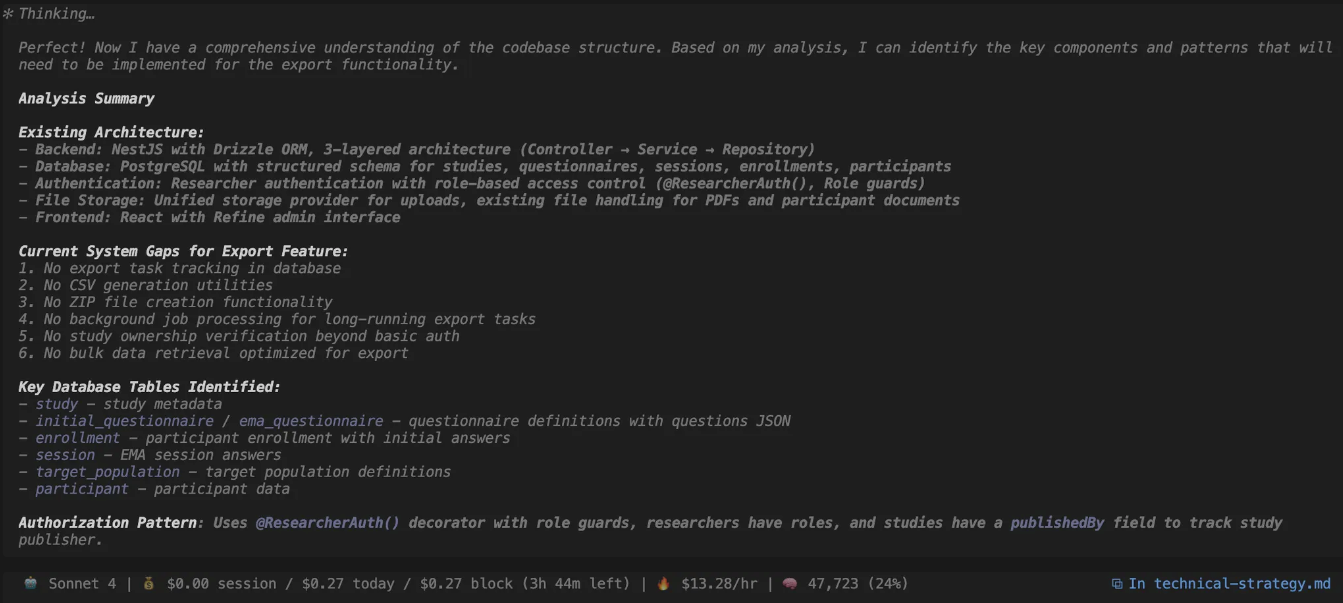

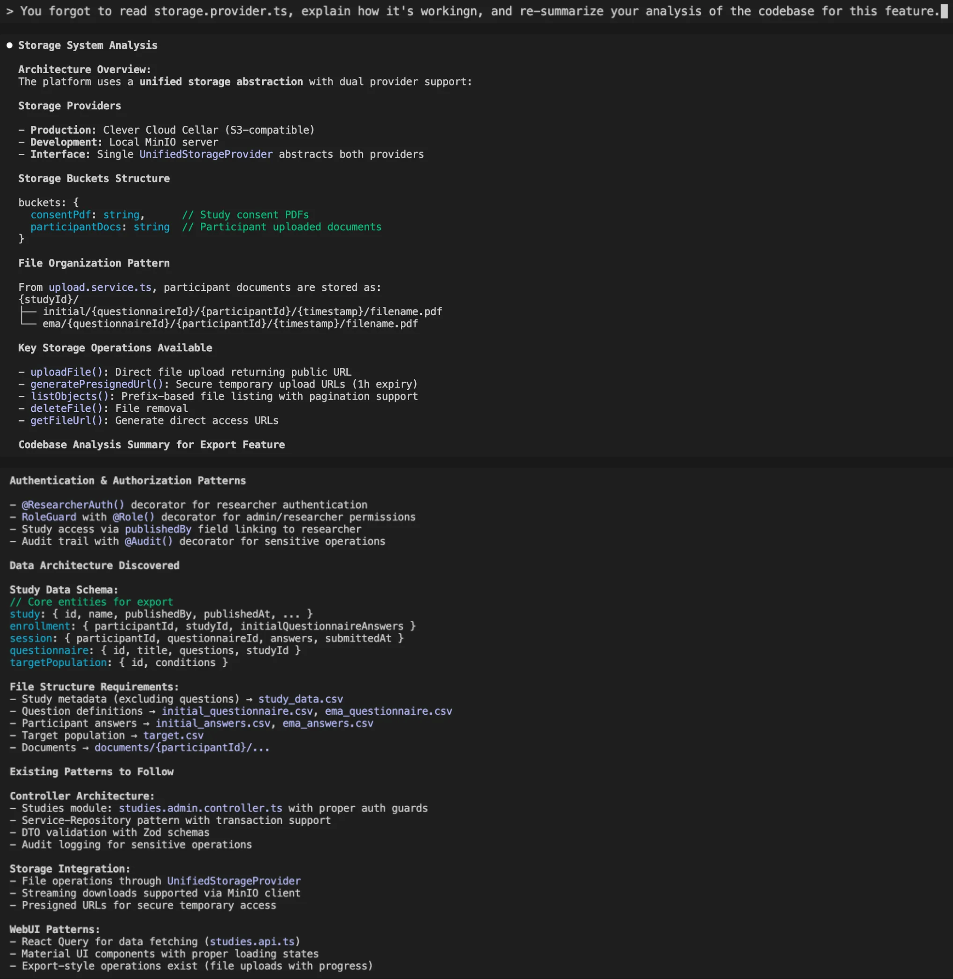

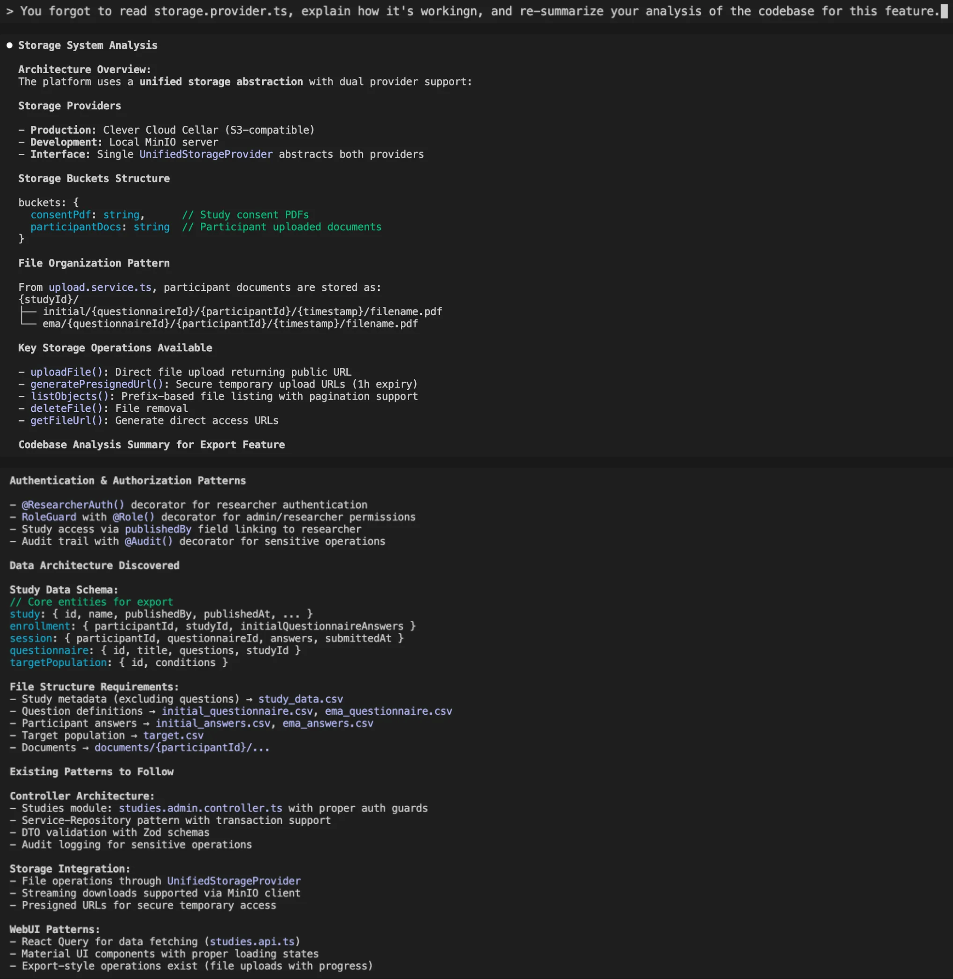

In this example, the context window is healthy at the moment (24% used), and Claude didn’t read parts of the codebase that contain technical debt or truly irrelevant files. However, a close read of its analysis shows that it never mentions the S3 bucket. If Claude were a junior dev, I would immediately realize that it would struggle to store the exported data without knowing which storage technology we’re using.

Better 👌

Let’s move on to the discussion …

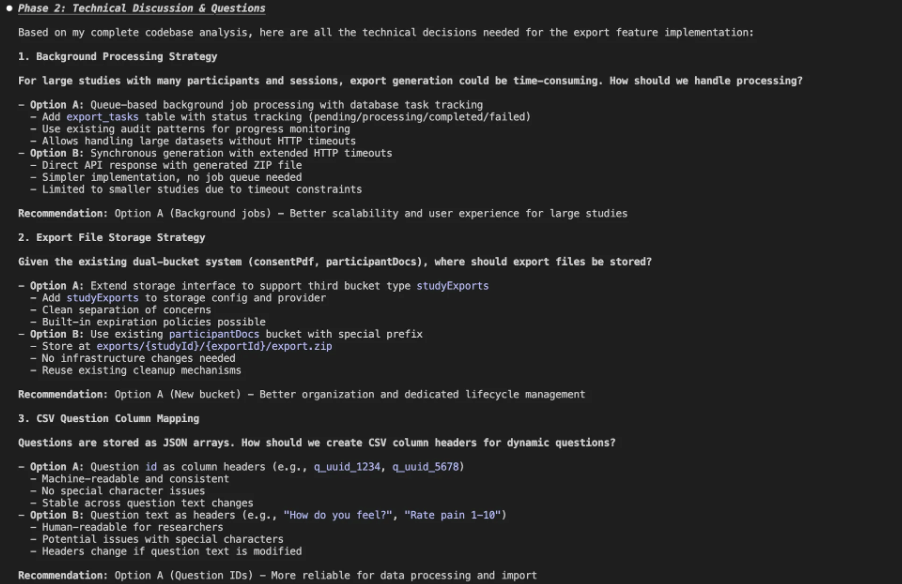

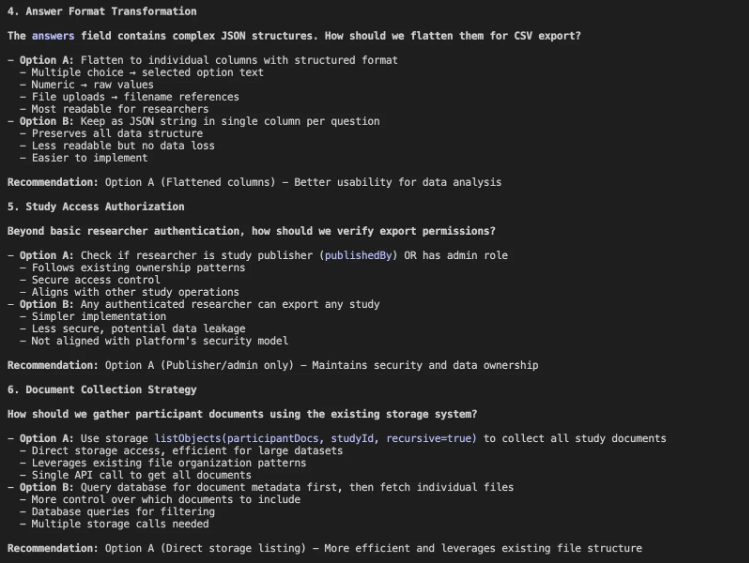

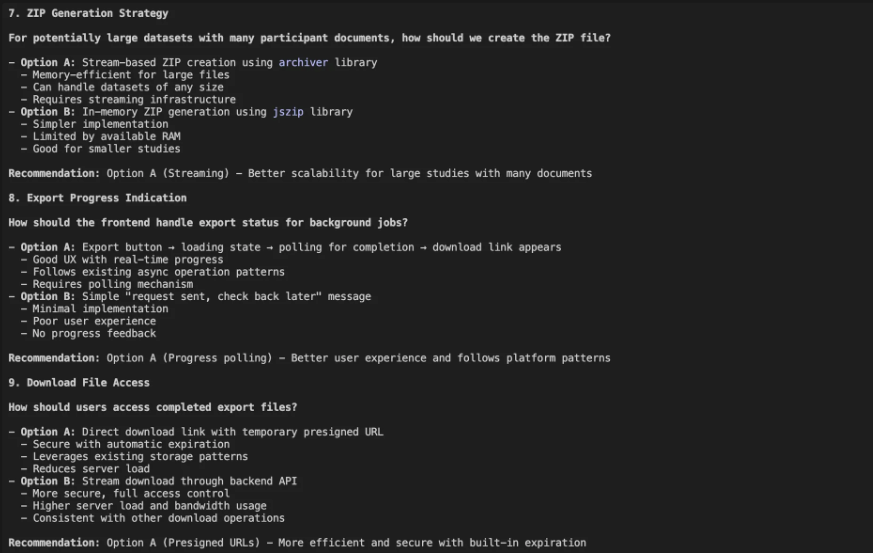

Phase 2 : Discussion 🦆

Now comes the best part: Claude asking targeted questions. This is when the magic happens. Many bad architecture decisions I would have made were caught at this stage, which avoided a lot of rework.

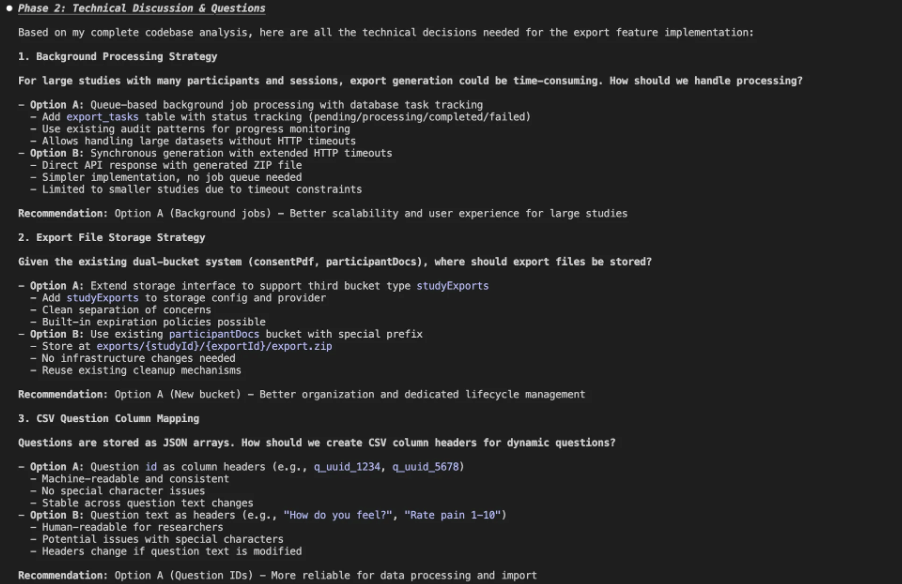

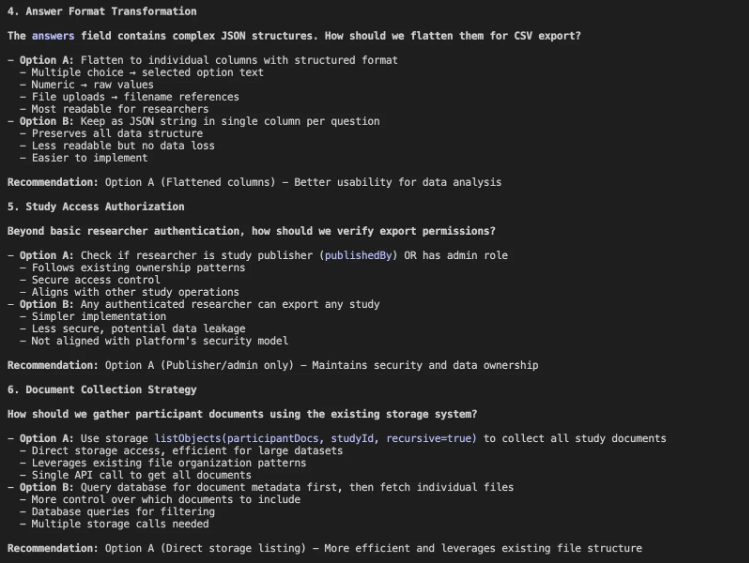

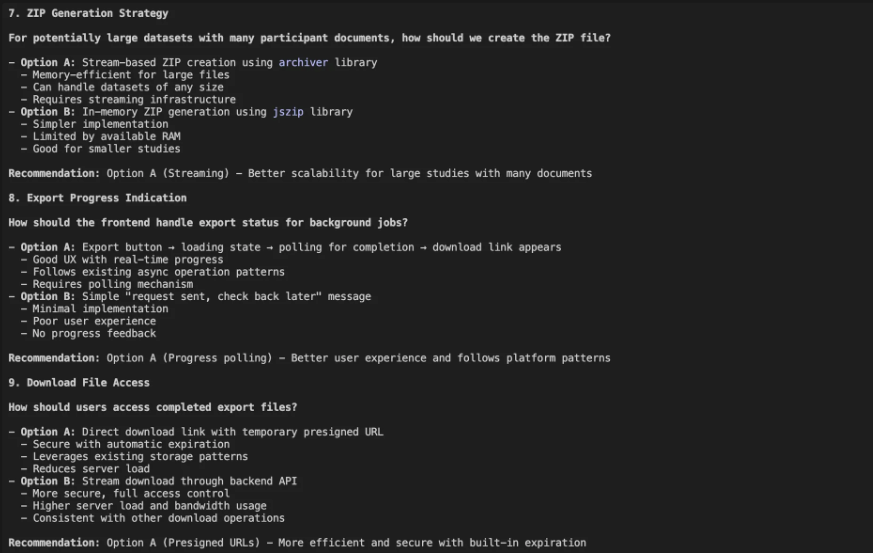

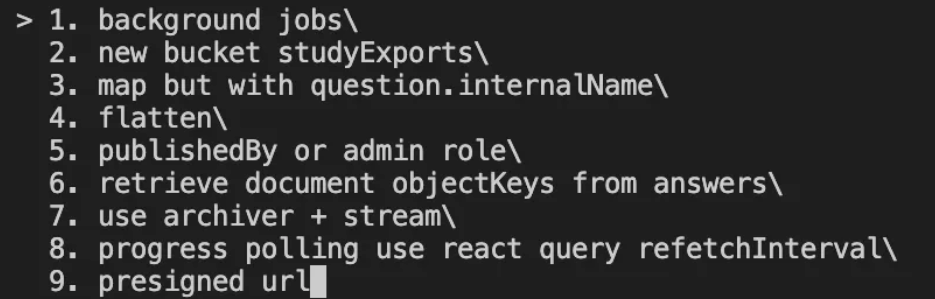

Let’s see how it works with our Export feature. Claude asked me 10 questions following its exploration.

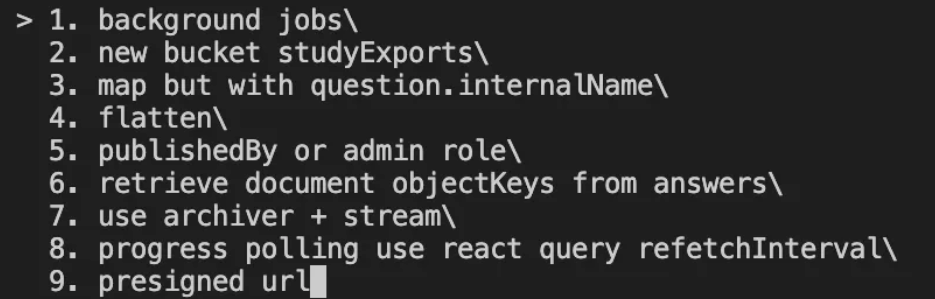

So far, so good: I was planning to set up a background task and add a new dedicated bucket (Claude would have missed the bucket entirely if I hadn’t pointed it out earlier).

I don’t agree with question 6. The links to the documents are already embedded in document-type answers. Listing files from the buckets could lead to missing or pulling the wrong documents. If I hadn’t clarified that, it would have turned into unnecessary rework once the feature was implemented.

Question 7 is excellent! I hadn’t considered the sheer size of participant-uploaded images and videos, so streaming from bucket to bucket is essential; otherwise a naïve in‑memory approach would have exhausted server memory and caused timeouts. The archiver pattern is the safest route: it lets us start writing the ZIP before all files are fetched, and we can still apply compression on the fly.

Had I ignored this nuance, a defect would have surfaced only after ingesting a few weeks’ worth of real data. By flagging it now, we avoid that rework and keep the export service horizontally scalable.
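To make the memory argument concrete, here is a toy model that contrasts loading every file before writing with forwarding each chunk as soon as it is read. It is a sketch under stated assumptions: it only counts bytes, it does not use the archiver library or any real bucket, and the upload sizes and the 1 MB chunk size are hypothetical.

```typescript
// Simplified stand-in for a file stored in a bucket: it yields chunk sizes in bytes.
// No real storage or ZIP library is involved.
function* readChunks(fileSize: number, chunkSize: number): Generator<number> {
  let remaining = fileSize;
  while (remaining > 0) {
    const size = Math.min(chunkSize, remaining);
    remaining -= size;
    yield size;
  }
}

interface TransferStats {
  bytesWritten: number;
  peakBytesInMemory: number;
}

// Naive approach: every file is fully loaded before anything is written.
function exportBuffered(fileSizes: number[]): TransferStats {
  const total = fileSizes.reduce((a, b) => a + b, 0);
  return { bytesWritten: total, peakBytesInMemory: total };
}

// Streaming approach: each chunk is forwarded as soon as it is read,
// so only one chunk is held in memory at a time.
function exportStreamed(fileSizes: number[], chunkSize: number): TransferStats {
  let bytesWritten = 0;
  let peak = 0;
  for (const fileSize of fileSizes) {
    for (const chunk of readChunks(fileSize, chunkSize)) {
      peak = Math.max(peak, chunk);
      bytesWritten += chunk; // stands in for writing the chunk to the archive
    }
  }
  return { bytesWritten, peakBytesInMemory: peak };
}

const MB = 1024 * 1024;
const uploadSizes = [300 * MB, 120 * MB, 80 * MB]; // hypothetical upload sizes
const buffered = exportBuffered(uploadSizes);
const streamed = exportStreamed(uploadSizes, 1 * MB);
console.log(buffered.peakBytesInMemory / MB, streamed.peakBytesInMemory / MB);
```

Both strategies write the same number of bytes, but in this model the streamed version's peak memory is bounded by the chunk size rather than by the total size of the uploads.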

I went through a few rounds of questions and answers before moving on to the next phase: generating the technical strategy document.

Phase 3 : Technical Strategy Generation

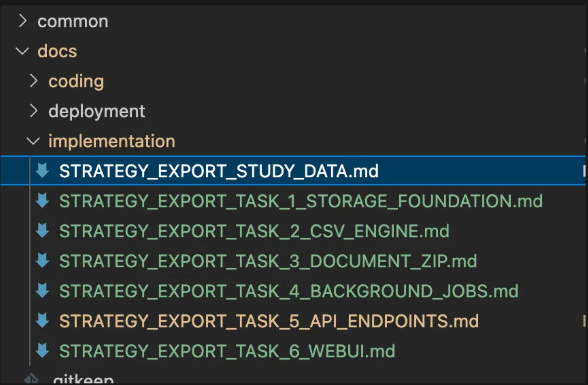

In this phase we turn the high‑level discussion into a concrete, actionable plan. The goal is to produce a short but precise markdown document that an LLM can follow without ambiguity.

My Review Checklist

Verify that every line is correct and unambiguous. It can be tempting to skim, but keep in mind that every token has an influence on quality.

Ensure the document stays under ~200 lines; if it exceeds that, split it into logical sub‑tasks.

Confirm that the LLM can parse each section without needing extra context, as this document is the only context Claude will start with to implement the feature.

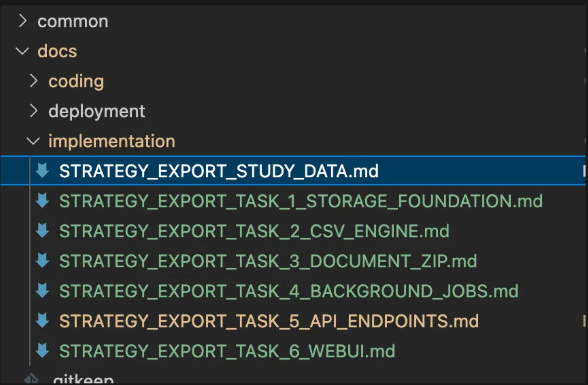

As this Export Study feature is quite big, I split it into 7 files, including 6 individual tasks that are each testable. To start a feature

How is it different from using Claude Code Plan mode?

Once again, it’s all about context engineering. When using Claude Code’s built-in Plan mode, you can’t control every token that reaches the LLM.

By crafting a custom template, you gain two advantages:

Predictability : the model outputs a plan that adheres to your specific project, business constraints, and physical realities, leading to more reliable outcomes.

Learning : iteratively refining the template teaches you how to design effective system prompts, sharpening your intuition for prompt engineering.

For most tasks I recommend avoiding the built‑in Plan mode unless you’re tackling a completely new problem where a generic plan is all you need.

2. Put strong guardrails in place to guide Claude Code Right First Time

For this part I couldn’t think of a better metaphor than this:

Your best chance of a strike is the plan, but adding guardrails will definitely keep you out of the gutter.

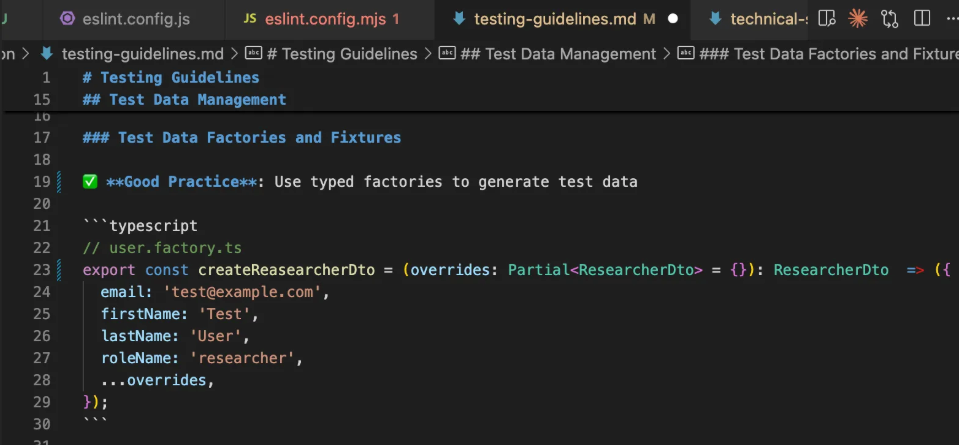

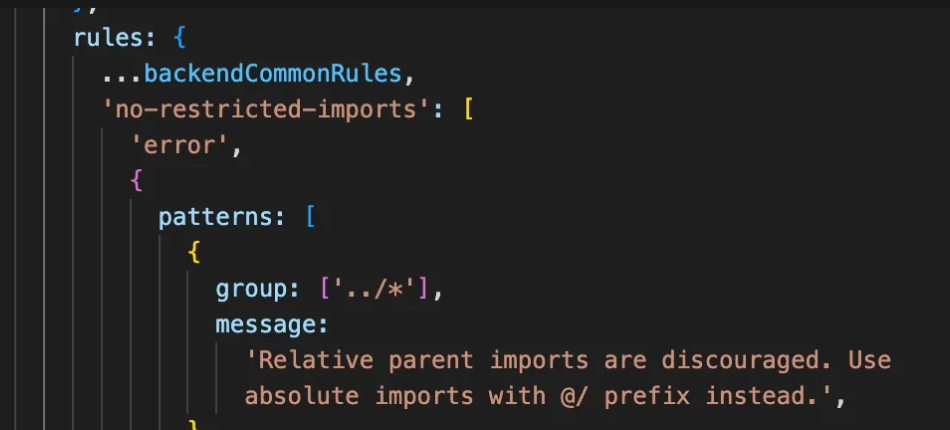

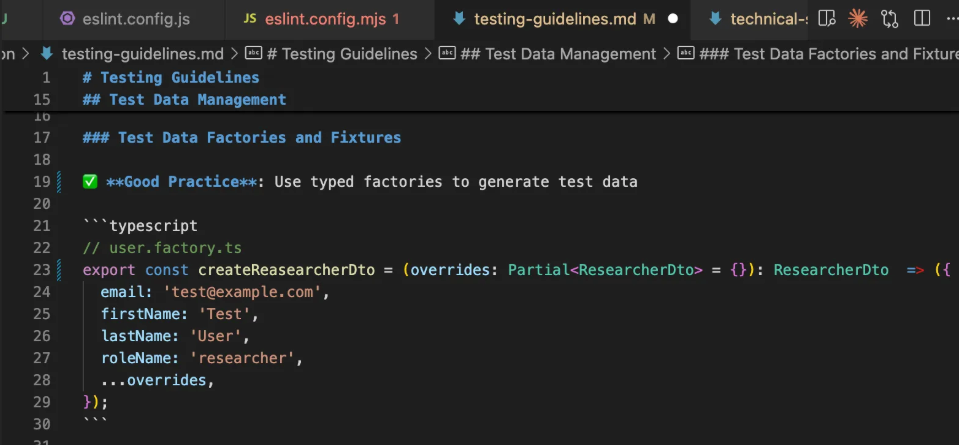

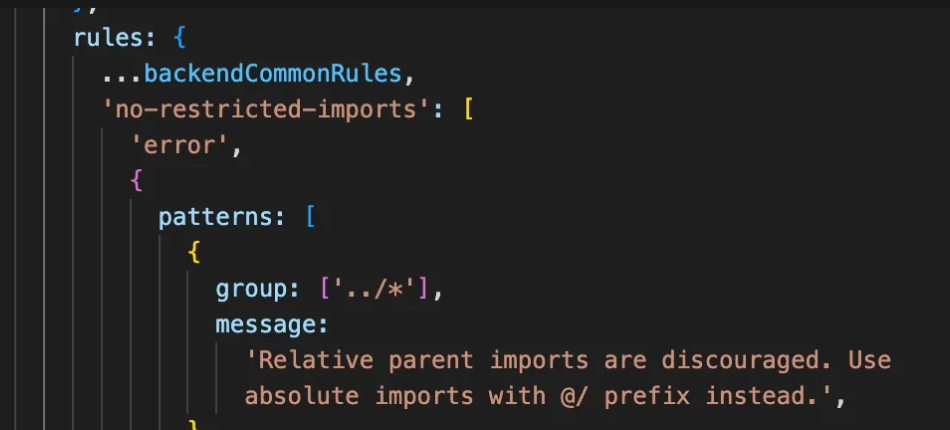

Coding standards

You already have a set of implicit rules about what good code looks like. Claude can’t guess those rules, so you need to spell them out explicitly—unless your codebase is already so mature that every file Claude reads automatically follows the standards.

So the idea is to write Markdown files that explain, in clear English, what good code looks like according to you.

You can then refer to these Markdown files in your technical strategy document, depending on the task ahead.

Prefer positive phrasing over negative phrasing: Claude tends to produce better results with the former. For example, say “Test files MUST define a factory helper method to create test data” rather than “Don’t redefine a new test‑data object in each test.”
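For readers unfamiliar with the pattern that rule refers to, here is a minimal sketch of a test-data factory helper. The `Participant` type and its fields are hypothetical; the project's real models are not shown in this article.

```typescript
// Hypothetical domain type, used for illustration only.
interface Participant {
  id: string;
  email: string;
  language: "en" | "fr";
  consentGiven: boolean;
}

// Factory helper: sensible defaults, with per-test overrides.
let nextParticipantId = 1;
function createParticipant(overrides: Partial<Participant> = {}): Participant {
  const id = `participant-${nextParticipantId++}`;
  return {
    id,
    email: `${id}@example.test`,
    language: "en",
    consentGiven: true,
    ...overrides,
  };
}

// Each test states only the fields that matter to it.
const frenchSpeaker = createParticipant({ language: "fr" });
const withoutConsent = createParticipant({ consentGiven: false });
console.log(frenchSpeaker, withoutConsent);
```

A rule phrased positively points the agent at a helper like this one, whereas the negative version only says what to avoid and leaves the alternative open.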

In our experience, the real challenge is keeping those rules manageable as your codebase and team evolve, so keep them minimal. If you manage to keep them lean, coding standards won’t have much impact on context window usage.

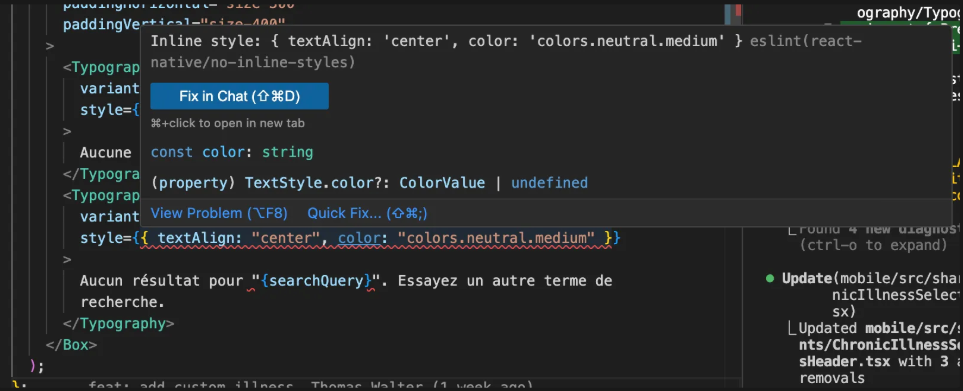

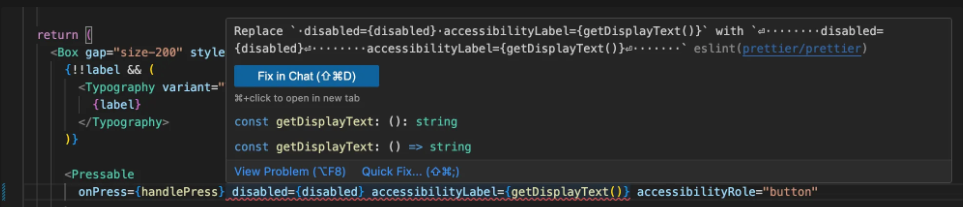

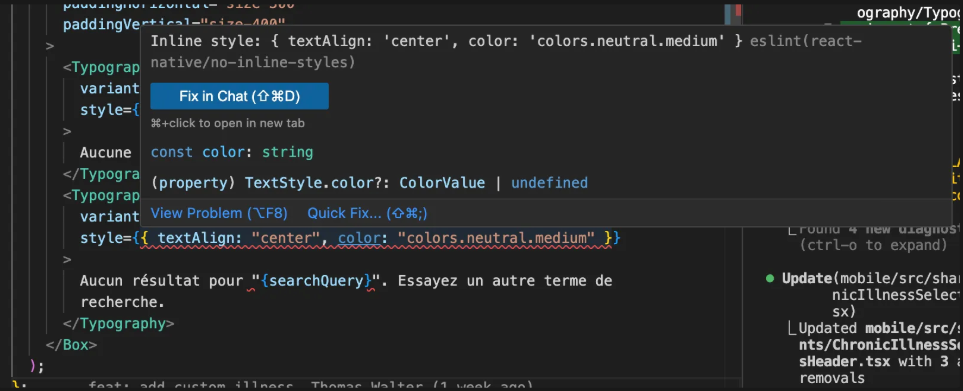

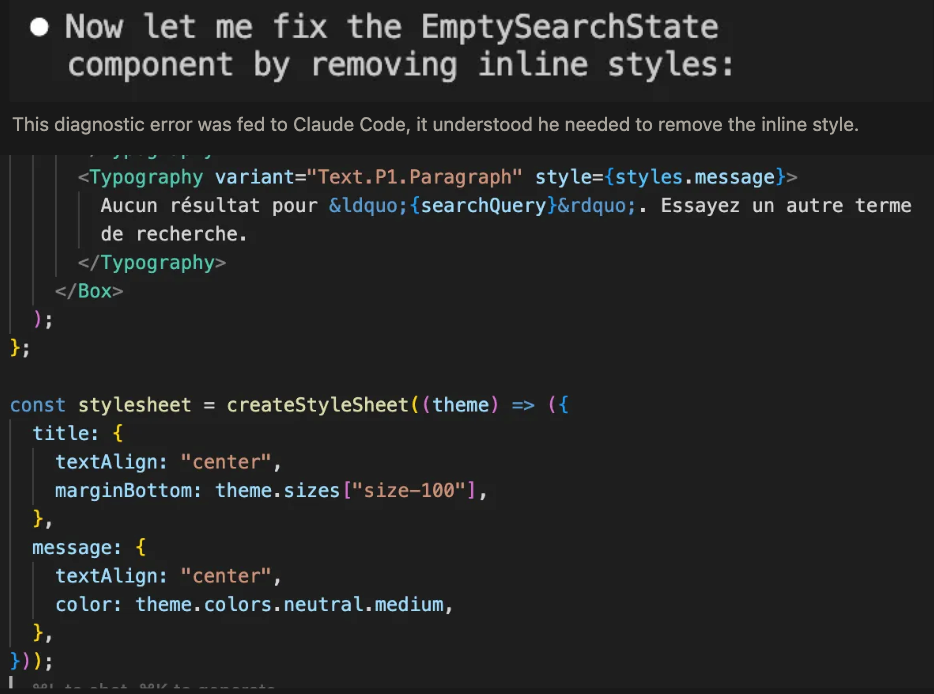

Linter & typing

A robust linter has always been a cornerstone of code quality; now it’s worth investing even more in one. Every diagnostic error is fed back into Claude, and if the error message is clear enough, Claude will happily correct it, at the expense of filling up part of the context window. This makes linting slightly more “expensive” than coding‑standard checks, but the payoff in quality is well worth it.

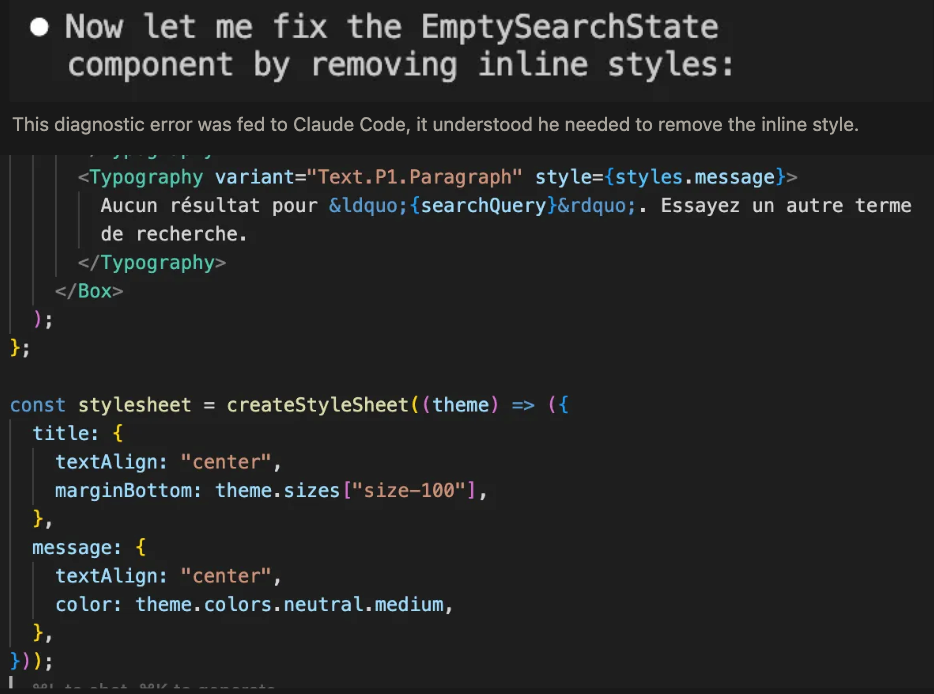

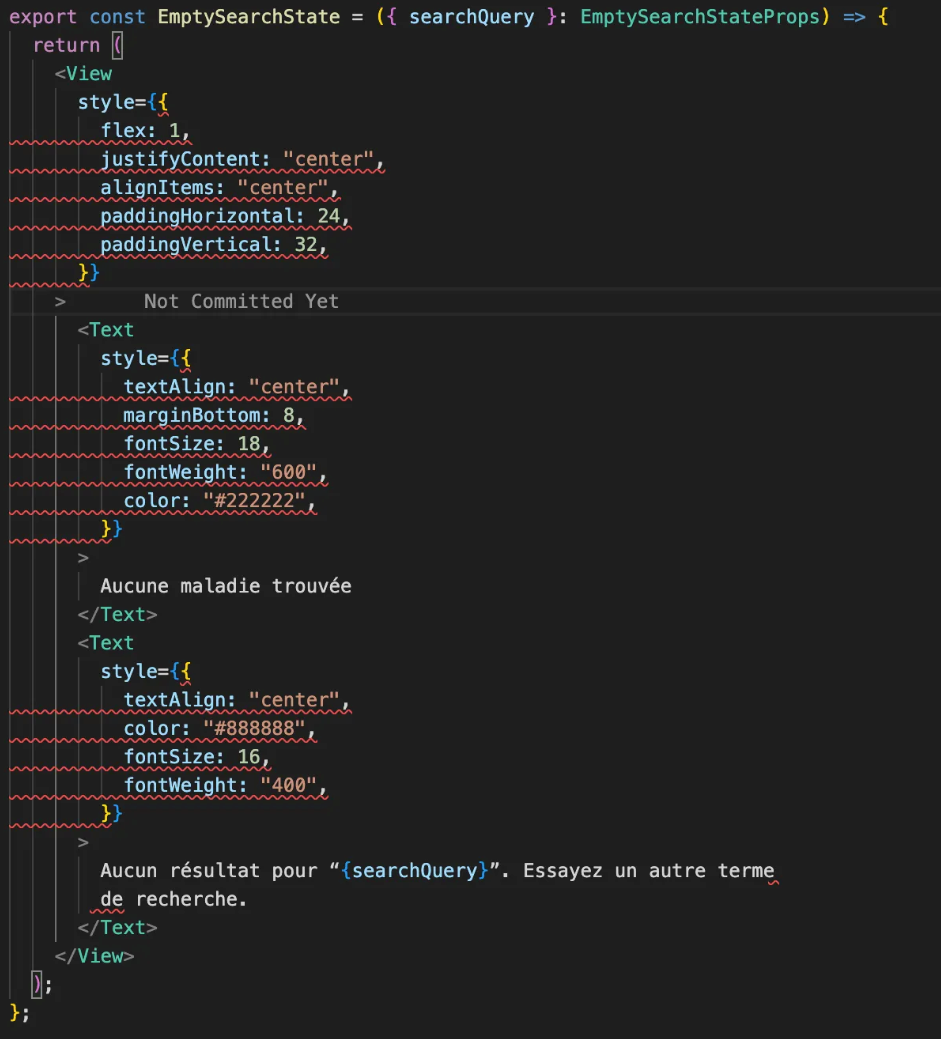

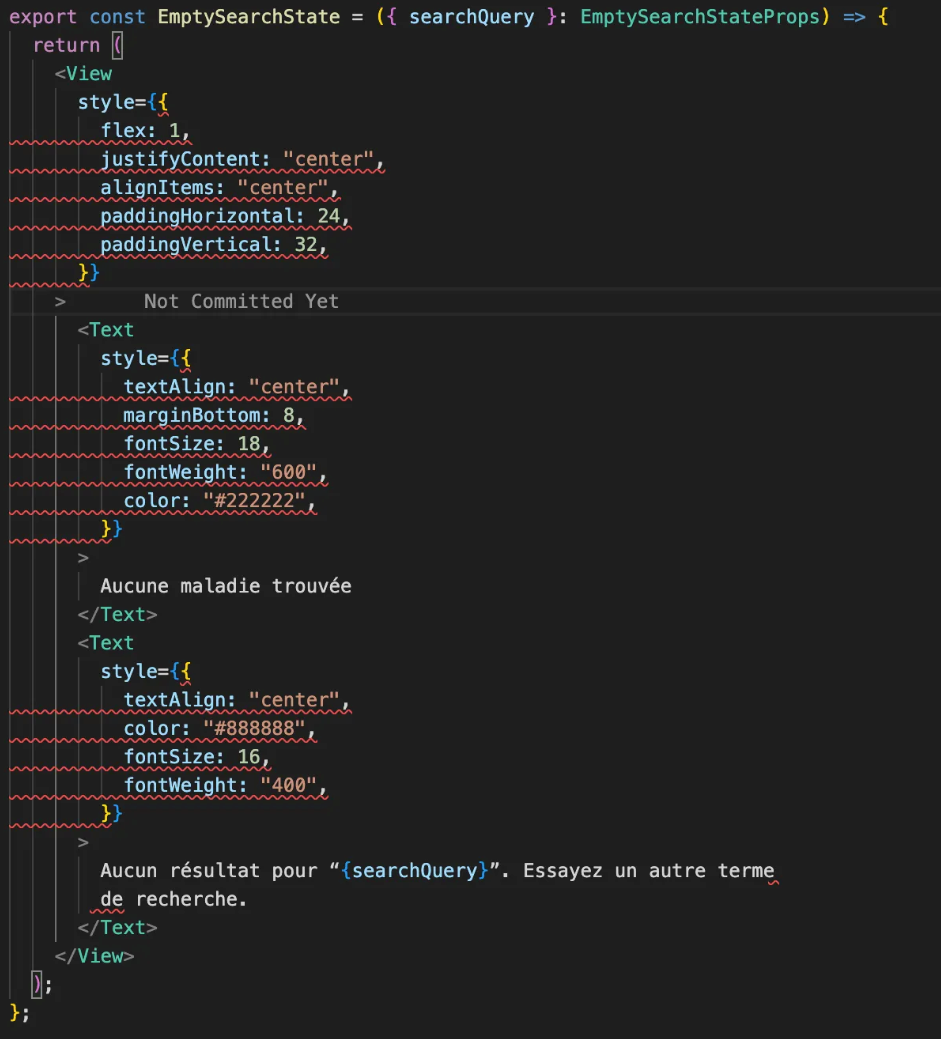

Claude wrote EmptySearchState.tsx but didn’t follow our coding standard of using a stylesheet instead of inline styles, which triggered the ESLint react-native/no-inline-styles diagnostic error.

On the second iteration, with this additional context, it introduced a stylesheet, which is what we wanted in the first place. It cost 2% of the context window.

This mechanism also works with typing. Robust types and a strict typing configuration enable Claude to write better code without you having to correct it.
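As one possible illustration of what robust types can look like, the sketch below uses a discriminated union with an exhaustiveness check. The `ExportJob` states are hypothetical and are not taken from the project.

```typescript
// Hypothetical export job states; names are illustrative only.
type ExportJob =
  | { status: "pending" }
  | { status: "running"; progressPercent: number }
  | { status: "done"; downloadUrl: string }
  | { status: "failed"; reason: string };

function assertNever(value: never): never {
  throw new Error(`Unhandled case: ${JSON.stringify(value)}`);
}

function describeJob(job: ExportJob): string {
  switch (job.status) {
    case "pending":
      return "Export queued";
    case "running":
      return `Export ${job.progressPercent}% complete`;
    case "done":
      return `Export ready at ${job.downloadUrl}`;
    case "failed":
      return `Export failed: ${job.reason}`;
    default:
      // If a new status is added to ExportJob and not handled above,
      // this line stops compiling, and that compiler error is what
      // gets fed back to the agent.
      return assertNever(job);
  }
}

console.log(describeJob({ status: "running", progressPercent: 40 }));
```

With types like these, a forgotten case shows up as a compiler diagnostic rather than a runtime bug found during review.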

If Claude struggles to fix a linting error, check the error message. As a rule of thumb, make sure it explains both what the issue is and how to fix it.

A good error message explains the issue and also a way to fix it.

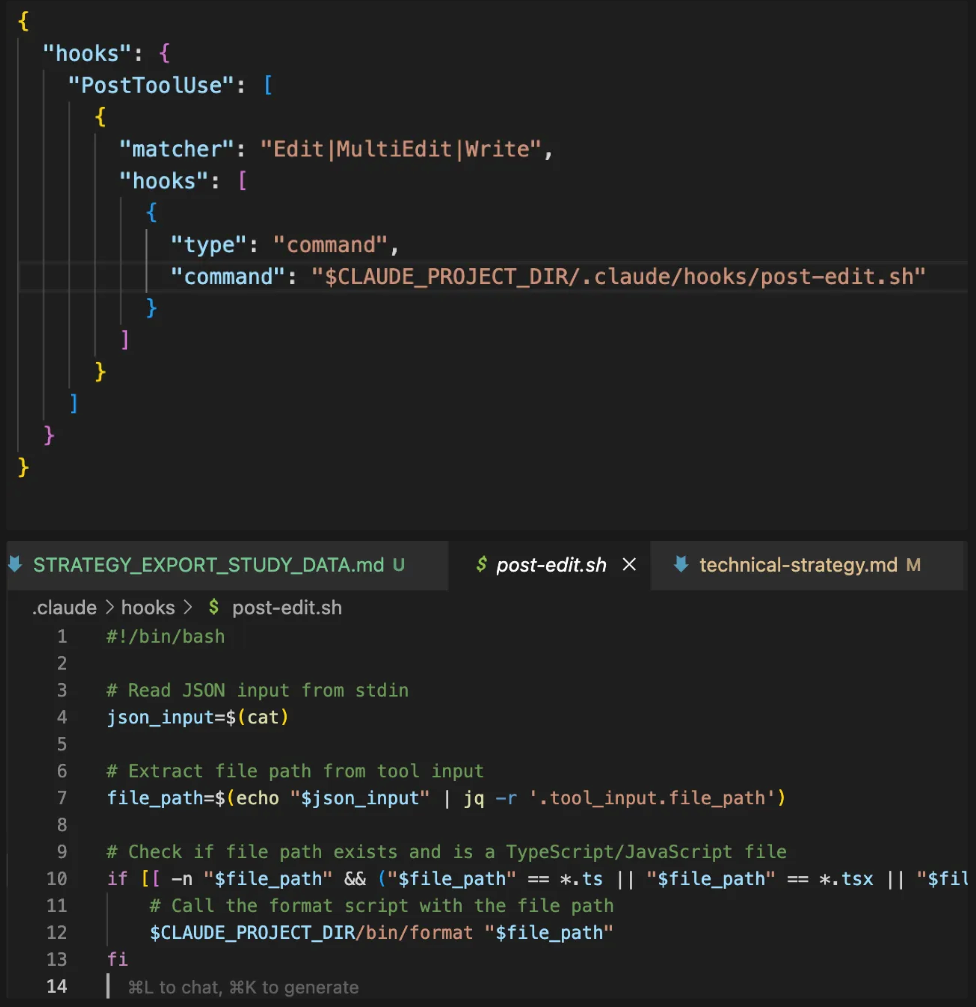

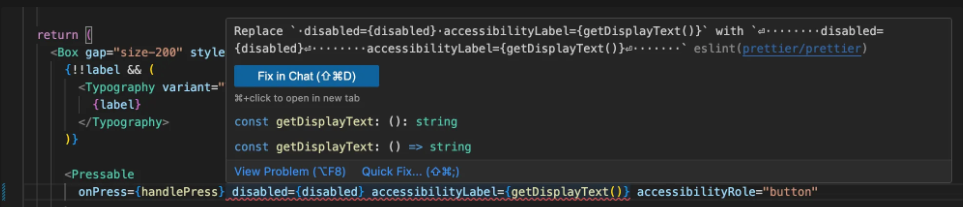

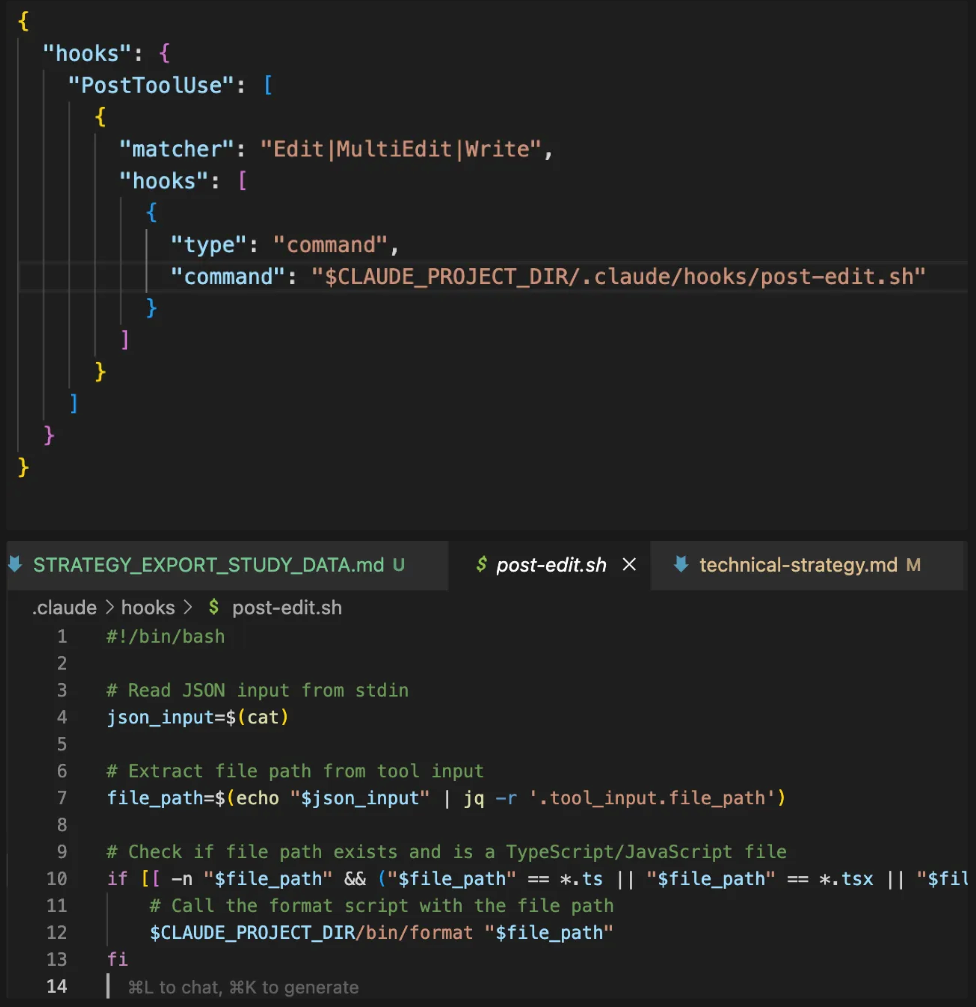

Formatting & Claude hooks

A common issue that eats a lot of context space is code formatting. Generally, Claude won’t follow your strict Prettier configuration and, as a result, generates a lot of diagnostic errors.

Prettier is configured to have one prop per line

Using Claude hooks, you can run Prettier’s autofix right after Claude writes the file.

This automatic step saves valuable context‑window space and reduces overall turnaround time.
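A hook of this kind boils down to a small decision: given the description of the file the agent just wrote, should the formatter run, and on which path? The sketch below shows that decision as a pure function. It assumes the hook receives a JSON payload containing `tool_input.file_path`, so check your tool's hook documentation before relying on that shape. The file path in the example is hypothetical.

```typescript
// Assumed shape of the JSON payload a post-edit hook receives; treat it as a
// hypothesis and check your tool's documentation before relying on it.
interface HookPayload {
  tool_name?: string;
  tool_input?: { file_path?: string };
}

const FORMATTABLE = /\.(ts|tsx|js|jsx|json)$/;

// Returns the formatter command to run for this payload, or null when the
// edited file is not one Prettier should touch.
function formatCommandFor(rawPayload: string): string | null {
  let payload: HookPayload;
  try {
    payload = JSON.parse(rawPayload) as HookPayload;
  } catch {
    return null; // malformed input: do nothing rather than block the agent
  }
  const filePath = payload.tool_input?.file_path;
  if (!filePath || !FORMATTABLE.test(filePath)) {
    return null;
  }
  // JSON.stringify wraps the path in double quotes so spaces survive.
  return `npx prettier --write ${JSON.stringify(filePath)}`;
}

// Hypothetical payload for illustration.
const examplePayload = JSON.stringify({
  tool_name: "Write",
  tool_input: { file_path: "mobile/src/components/EmptySearchState.tsx" },
});
console.log(formatCommandFor(examplePayload));
```

Because the formatting happens outside the conversation, the resulting diff never has to travel through the model as diagnostics.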

Test-Driven-Development

The last part of making sure Claude writes code that works Right-First-Time is to employ a TDD workflow. I usually start by asking Claude to draft the test files, review them myself, and then let it write the implementation, running the tests autonomously.

This approach offers a triple advantage:

It guarantees that your code is tested.

The code Claude writes is inherently testable.

It gives you, as the architect, an opportunity to build a robust testing system.

For example, I introduced a test‑environment helper that starts a PostgreSQL container, boots a NestJS app, and seeds the database with minimal boilerplate. This reduced the amount of code Claude had to write for a backend test, and therefore the context window usage, while keeping everything fully testable.

Summary

The final outcome for the Export Data feature: it was designed, developed, validated, and pushed to production in just two man‑days, without a single bug. By contrast, the team had originally estimated it would take five man‑days without LLM agent support.

Summary of the Right First Time Agentic Coding method

3. Right First Time or … Jidoka !

Now what Lean Tech teaches us is that every problem is an opportunity to learn, and to improve our daily working conditions.

So when the agent falls short implementing a feature Right-First-Time, the tempting shortcut is to hand-craft the missing bits yourself. That shortcut steals a precious chance: you’ll never uncover why the LLM failed, you won’t sharpen your intuition about how agents actually work, and you’ll miss the opportunity to iterate on—and ultimately improve—the feature factory you’ve built.

Rule of thumb: When an agent can’t finish a task, investigate why. Don’t just patch the output.

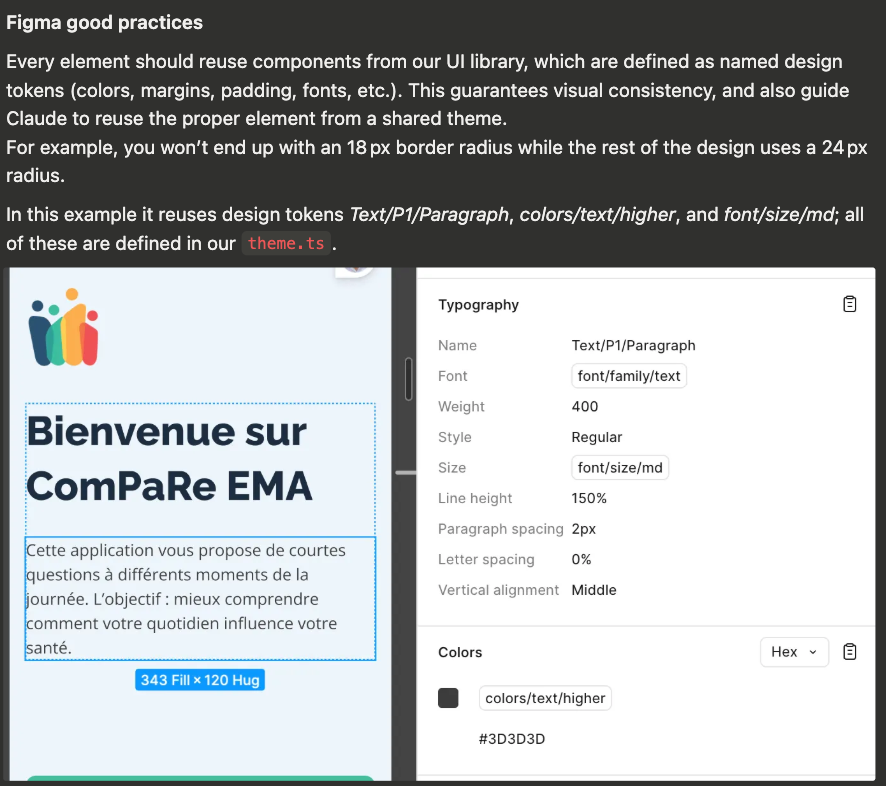

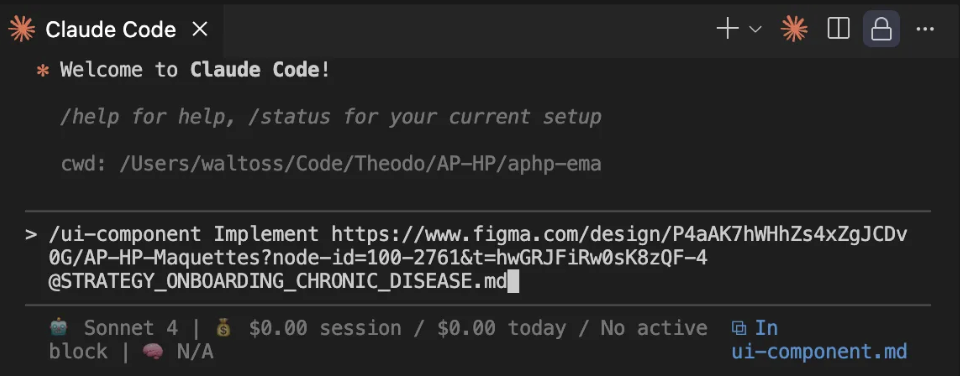

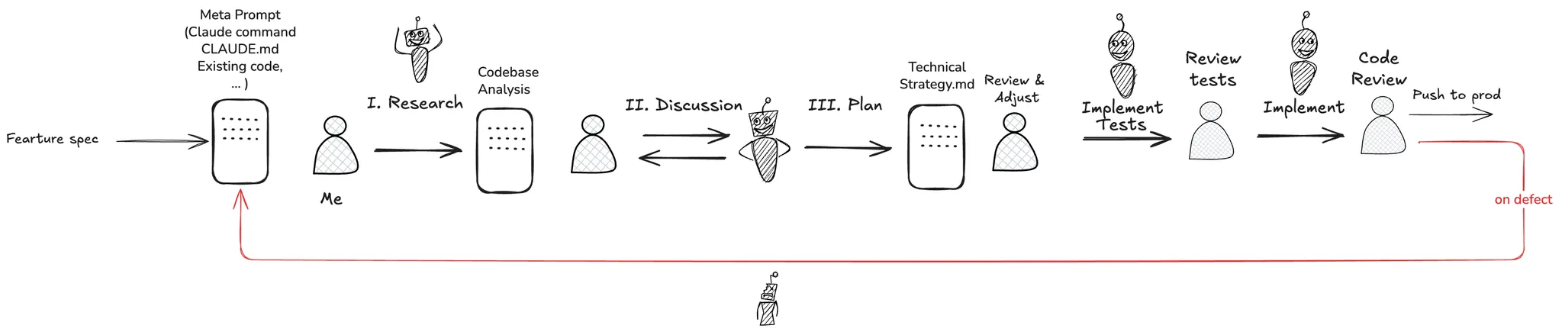

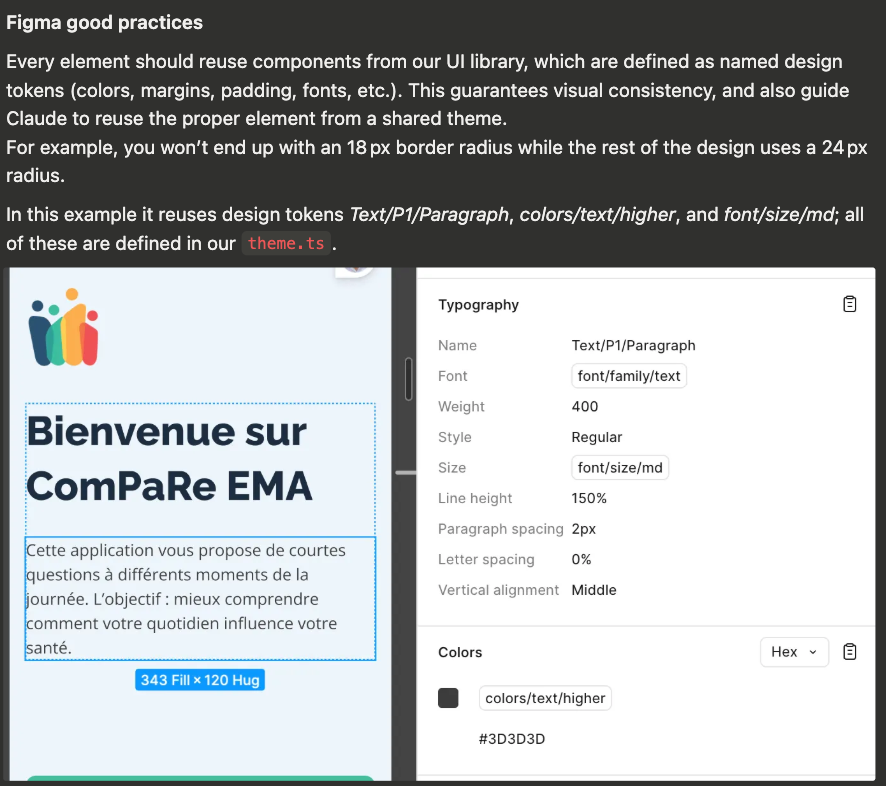

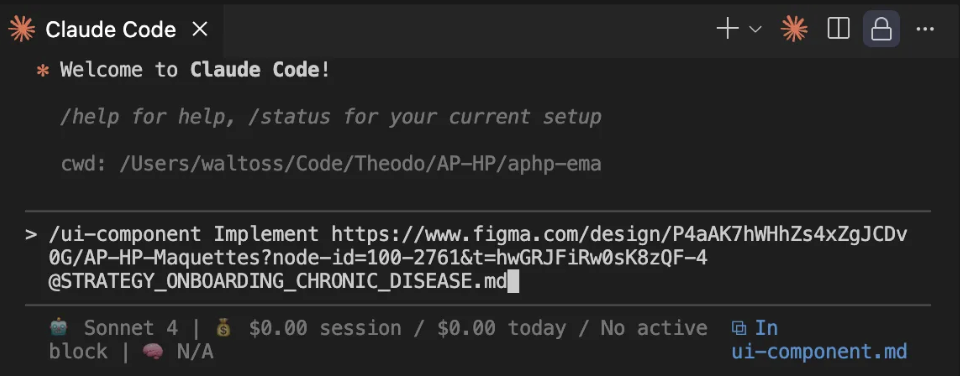

The /ui-component command

To illustrate this, I built a Claude slash command called /ui‑component.

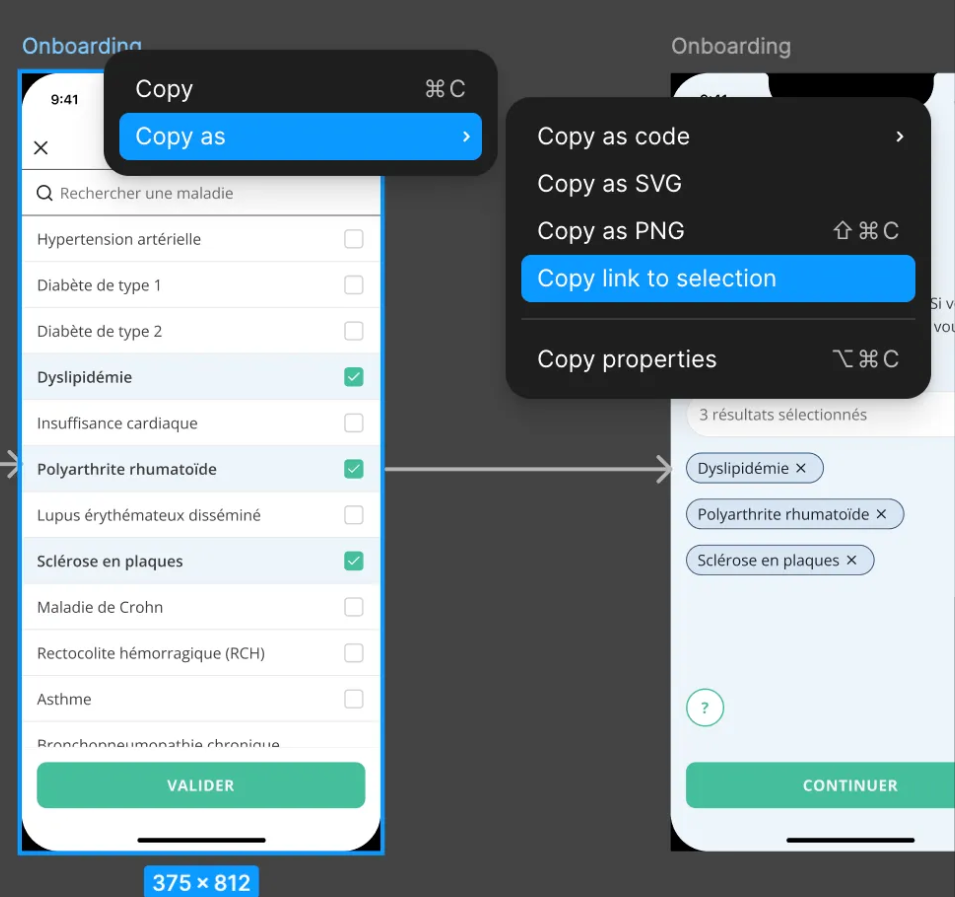

Like many others, it connects Claude to this project’s Figma via one of the many available MCP servers. The command accepts a Figma screen link and a technical strategy document. Its goal is twofold:

to replicate the screen pixel-perfectly

but also to follow our project standards: reusing existing components, applying theme colors, sizes and typography, using translation keys for English and French, …

To start implementing a given screen, I just need to copy its link and give it to Claude, which will use the MCP tool get_figma_data_tool to retrieve everything that defines this screen (layout, design tokens, names, properties, …) as JSON.

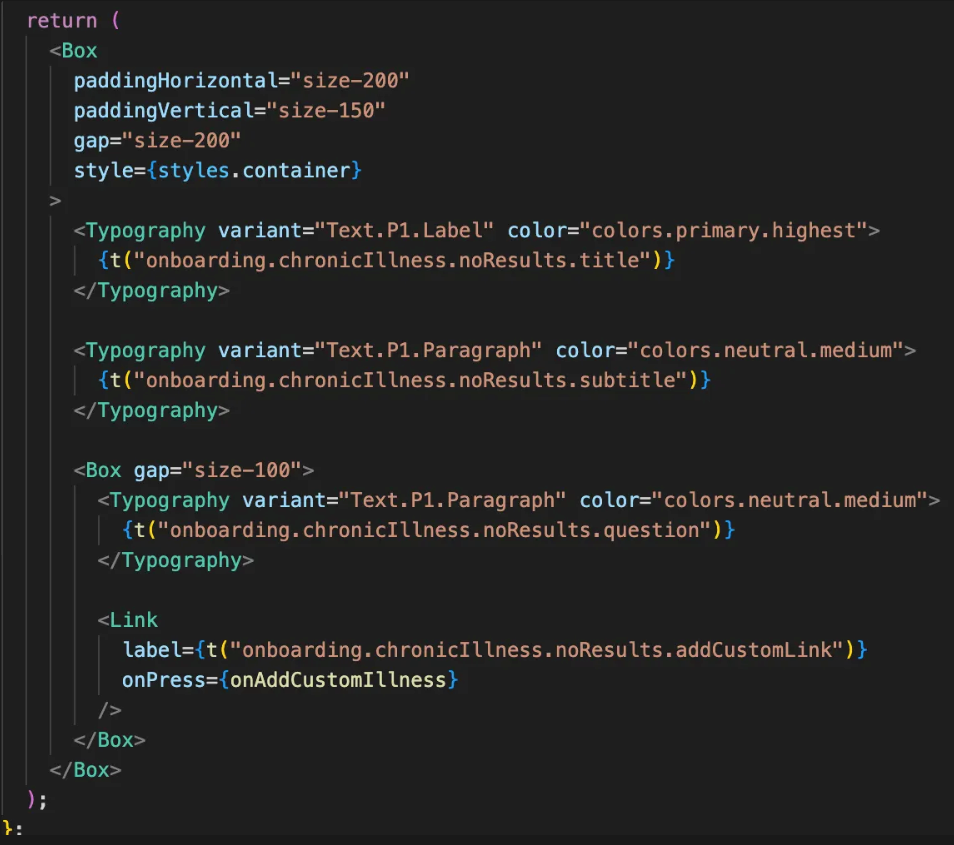

On this example, Claude first pass generated

My first attempt was to hard‑code a bunch of rules and example files, but Claude kept slipping up. Either it was missing a component that wasn’t in its context (e.g. it used the React Native <Text> component instead of <Typography> from our design system), or the 200k context window blew up and Claude ended up looping until it failed (probably due to the ≈ 60k tokens from the Figma export).

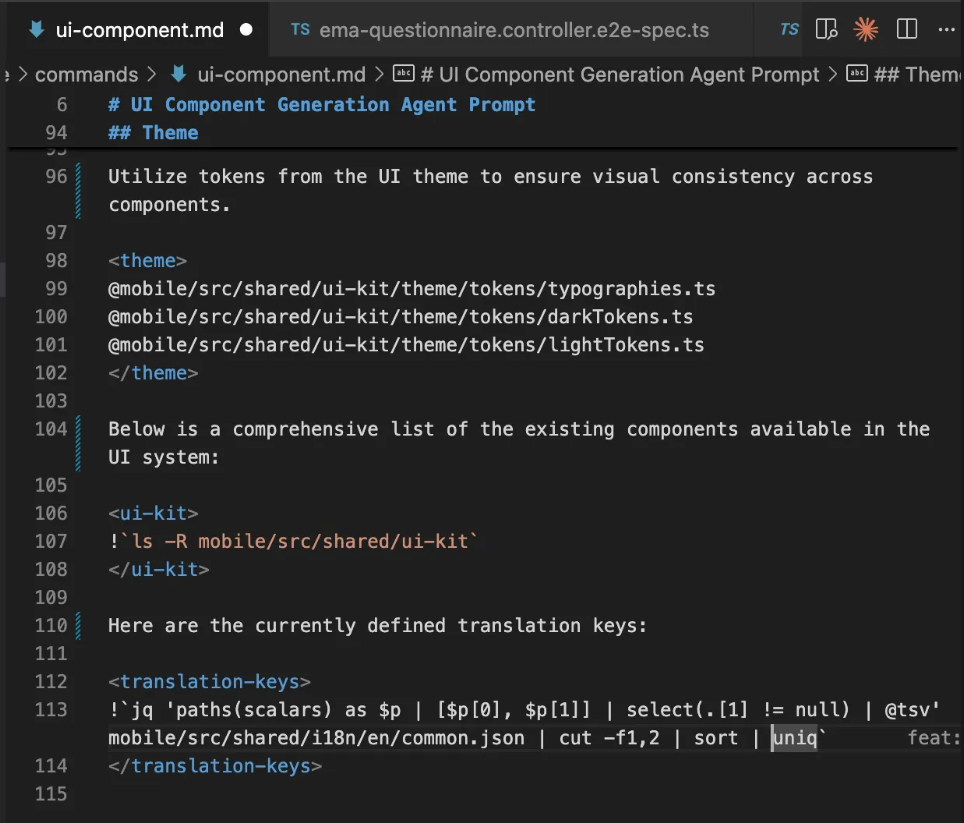

I solved this problem with two ideas embedded in my ui-component slash command:

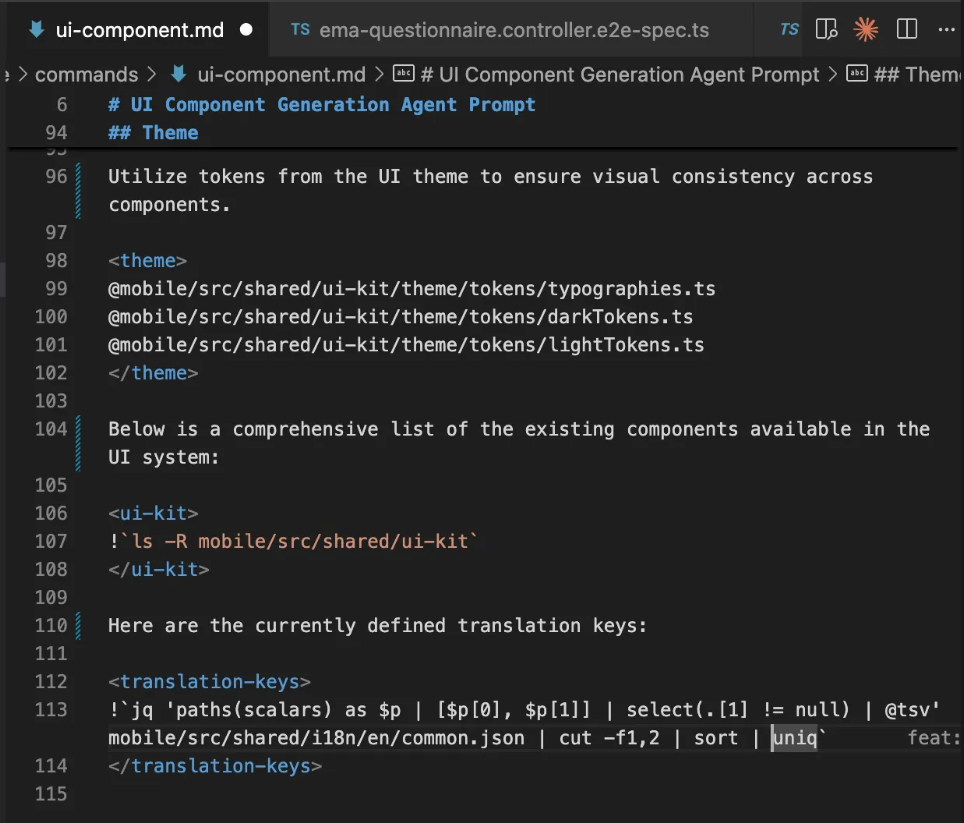

Inject needed files instead of letting Claude discover them: I used @ directives to inject the theme files directly into the prompt before it is sent to Claude. This saves Claude from searching for and opening multiple files to get context on the theme and the components available in our UI system.

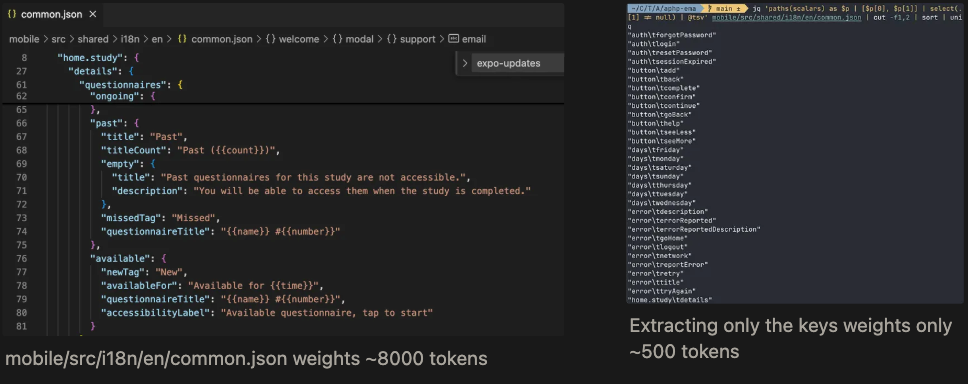

Extract information from large files using bash: instead of loading entire i18n bundles (which would again overflow the context), I leveraged Claude’s !`<bash command>` syntax, which executes a command and stitches its stdout into the prompt before the LLM sees it.

For example:

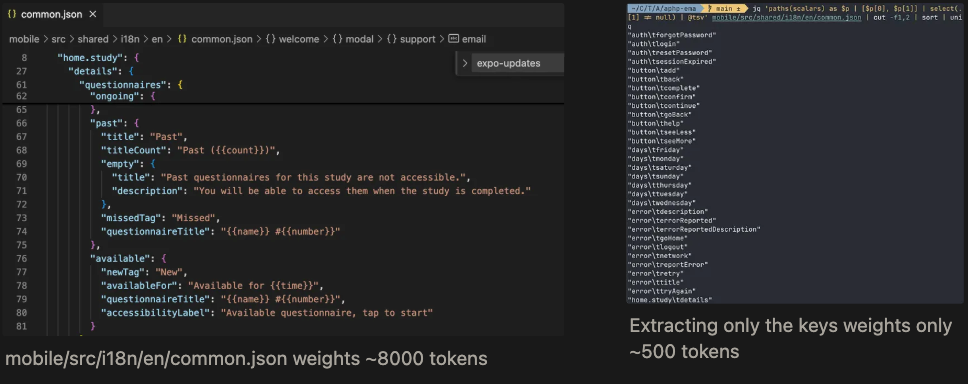

jq -r 'paths(scalars) as $p | [$p[0], $p[1]] | select(.[1] != null) | @tsv' mobile/src/shared/i18n/en/common.json | cut -f1,2 | sort | uniq

This single line outputs every translation key in a two‑column TSV (~500 tokens), without dumping all the actual translations into Claude’s context (8000 tokens).
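For readers less fluent in jq, the same idea can be sketched in TypeScript: walk the translation tree, keep only the first two path segments of each leaf, then deduplicate and sort. This is an illustrative equivalent rather than what the slash command runs, and the sample bundle below is made up.

```typescript
type TranslationTree = { [key: string]: string | TranslationTree };

// Collects the first two path segments of every string leaf, deduplicated and
// sorted. Leaves that sit directly at the top level are skipped, as in the
// jq one-liner's `select(.[1] != null)`.
function topLevelKeyPairs(tree: TranslationTree): string[] {
  const pairs = new Set<string>();

  const visit = (node: TranslationTree, path: string[]): void => {
    for (const key of Object.keys(node)) {
      const value = node[key];
      const nextPath = path.concat(key);
      if (typeof value === "object") {
        visit(value, nextPath);
      } else if (nextPath.length >= 2) {
        pairs.add(`${nextPath[0]}\t${nextPath[1]}`);
      }
    }
  };

  visit(tree, []);
  return Array.from(pairs).sort();
}

// Made-up sample bundle, for illustration only.
const sampleBundle: TranslationTree = {
  common: { save: "Save", cancel: "Cancel" },
  export: {
    title: "Export study",
    errors: { timeout: "Timed out", empty: "No data" },
  },
  appName: "Demo app",
};

const keyPairs = topLevelKeyPairs(sampleBundle);
console.log(keyPairs.join("\n"));
```

Only key names reach the prompt; the translated strings themselves, which make up most of the file, stay out of the context.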

The result: a lean prompt that keeps the model focused and avoids token bloat, while still giving it everything it needs to generate a pixel‑perfect, standards‑compliant UI component.

In the end, I got a fully featured component coded in under 5 minutes, without even looking at my Figma file.

Jidoka is one of the two pillars of the Toyota Production System that inspired the Lean Tech Manifesto. It teaches us that when a defect appears on the production line, it’s better to stop the line, identify the root cause, and fix it before resuming. Applied to agentic coding, this means pausing Claude, adjusting your meta‑prompt (slash commands, existing code, coding standards, linter rules, etc.), resetting everything, and restarting. By doing so, you build, step by step, an AI‑powered factory that ships entire features Right First Time.

Work as a team: invest in an AI-learning organization … or an organization learning AI

I want to emphasize that it’s perfectly normal, and healthy, to fall back on writing the code yourself. There are times you’ll want to step back and manually polish the few remaining errors that prevent a feature from being finished. Plus, you don’t want to forget how to ride a bike, right?

Now in a team setting, I recommend having everyone log any defects that arise when Claude fails to deliver a right‑first‑time implementation. Then, set aside a short, dedicated slot each day to review those failures together and experiment with improvements.

This practice turns your team into a learning organization that knows how to react when an LLM doesn’t produce the exact result you need.

In a world where a new model, a new IDE, a new CLI, or a new framework appears every week, the best investment you can make as an engineering leader is in your people. By having the whole organization learn how to use these new tools in their daily work, you have a better chance of getting ahead than by spending time negotiating contracts with the best LLM provider, which will be dethroned in two months.

Conclusion

First, a big shout-out to the team for pulling off this very intense project: Thibaut, Hélène, Aurelien, Rémi, Matthieu, Jade, Anne & Clement 👏

It’s hard to imagine that software development will not be entirely transformed in the coming years, with agents becoming an integral part of our daily workflow. These tools can either eliminate the need to type on a keyboard, or they can accelerate the learning of deep technical concepts, enabling us to build better, more performant and more secure products, and open up new possibilities for rapid iteration with users, ultimately delivering them a better overall experience.

At Theodo we nurture that mindset by using agents first and foremost to build more ingenious technical designs. This encourages continuous deep learning while cultivating an intuition for how agents can help us build better products for our customers and solve society’s problems.

What about the cost? Shipping 15 complex features cost me roughly €500 in Claude usage. It is a notable expense, yet very modest compared to the man-hours saved on the engineering side. Whether you see it as expensive or worthwhile depends on your budget, but in practice the ROI is hard to ignore.

PS : this article was extensively reviewed and refined with the help of openai/gpt-oss 20B 🙏

Full article here: https://m33.notion.site/How-I-Shipped-2-Months-of-Features-in-3-Weeks-With-LLM-Agents-and-What-It-Means-for-Engineering-Lea-2768f3776f4f809d8793f68a7ca4402a

Written by Thomas Walter